What the UK's Consultation on AI and Copyright Means for the Games Industry | AI and Games Newsletter 29/01/25

A seemingly complex issue, that in practice should be rather simple.

The AI and Games Newsletter brings concise and informative discussion on artificial intelligence for video games each and every week, plus a summary of all of our content released across various channels, like our YouTube videos and in-person events like the AI and Games Conference.

You can subscribe to and support AI and Games on Substack, with weekly editions appearing in your inbox. The newsletter is also shared with our audience on LinkedIn. If you’d like to sponsor this newsletter, and get your name in front of our audience of over 6000 readers, please visit our sponsorship enquiries page.

Hello all, welcome back to the newsletter. This week is a hefty topic, as I dig into the UK government’s consultation on copyright and the impact of relevant legislation and protections on the creative industries - which of course includes games! Oh boy, this is a big one! I break it down as best I can, while also providing all the information you need to respond to this yourself if you so wish.

But first things first, let’s quickly do the announcements, and other stuff in the news!


Announcements

The first backer update for the Goal State Kickstarter went live last week. This is, first and foremost, for everyone who has backed Goal State since back in December, but it will also be available to subscribe to for everyone else if they so wish. Note that I accidentally set up this new ‘section’ of the site with everyone subscribed - rather than the opposite, which was my intention. I’m so sorry about that. If you do not receive these updates in your inbox, you can adjust your notifications in your account settings. Don’t forget that late pledges are still live now on Kickstarter! So if you like the sound of what’s coming up and want to get in there early, head over to the Goal State funding page.

With DOOM: The Dark Ages announced in the Xbox showcase last week as launching on May 15th, it reminded me that I had planned to re-release my case study on the 2016 reboot of the series (which Dark Ages is a prequel to). So yeah, that’s live now to check out.

The 2025 Guildford Games Festival is only two weeks away. Looking forward to being on a panel at that event.

Game AI Uncovered Vol 3 is on sale now! Use discount code DIS20 for 20% off at checkout on the publisher Routledge’s website. You can also buy it on Amazon.

The 2025 AI and Games Summer School (not owned by us, but we’re all friends) is now open for registration, with early bird tickets available until March 1st. This year it’s taking place in Malmo, Sweden, with speakers from the likes of Massive Entertainment, EA SEED, Tencent, and King. Head over to their website now for more info.

A reminder that I’m organising meetings for GDC 2025! Hit the button to book in a time slot if you want to meet across the week.

AI (and Games) in the News

Some AI-related headlines from across the games industry this past week.

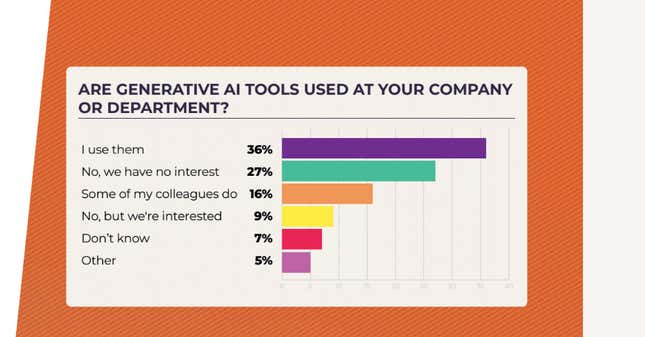

GDC’s Developer Survey Shines a Light on Generative AI Adoption

Last week the Game Developers Conference published their 2025 State of the Games Industry report, which is something of a reflection of the current state of things with regards to the games industry. Not surprisingly, after it made headlines in 2024, generative AI is once again part of the conversation.

Now I say something of a reflection, given that it’s always important to give context to who actually responds to the survey. Shout-out to Laine Nooney on BlueSky whose thread below does a great job of pointing out that, much like Unity’s report which I took a swing at near the end of last year, it doesn’t really reflect day-to-day game devs: 32% of respondents were indies, while only 15% were AAA and 10% AA, meaning the remaining 43% of respondents were academics, co-development, investors, consultants and whatever ‘Other’ is. So yeah, it’s important not to treat these numbers as gospel given they’re not reflective of the game dev community - though in this instance, I suspect they'd be even higher if they were.

While the full survey is pretty interesting, with key highlights being challenges in funding, PC growing as the primary platform, and apathy towards live service, for us the main thing was generative AI adoption.

There’s a lot of things to unpack here, but some quick highlights are that the highest levels of generative AI tool adoption in games studios were in business and finance (50%) and in community and marketing (40%). Meanwhile the overall enthusiasm for AI tools dropped from 21% to 13% from 2024 to 2025.

This doesn’t surprise me, given most generative AI tools that are of any real use work well in mundane administrative tasks rather than in creative spaces, and as we’ve discussed at length already there are still a lot of issues for their broader deployment in creative workflows.

I’m going to return to this survey in a future newsletter to dig into it some more.

Capcom Acknowledges They’re Exploring Generative AI for Ideation

In a recent interview with Google Cloud, Capcom’s technical director Kazuki Abe speaks about their adoption of generative AI tools. Like many other game developers I speak to and work with, they’re focussing on prototyping and ideation, to the point that they built a pipeline in Google Cloud for the purpose of generating descriptions of potential game objects that reflect the in-world lore and setting.

The example given in the article is of a television in the game world. The idea is to speed up ideation on the brand of TV manufacturer and their logo, plus the overall design of the TV and its shape and aesthetics, through use of a generative AI pipeline. As you can see they have sample outputs of other artefacts such as an arcade machine.

But critically, the concept is to turn this into a tool for developers that helps them quickly iterate on an idea. But once the idea is fleshed out, they no longer use the generative tool and, in the example of the arcade machine above, an artist would go away and build it from scratch in a way that fits the game and pipeline they’re working within.

It’s an interesting idea, given it suggests they’re looking to support developers with an AI tool. Though it’s too early to determine how cost effective or practical something like this is in production. Plus, it’s worth highlighting that these tasks are often the ones given to more junior artists on games projects prior to being moved onto more ‘critical’ aspects of art production. So what impact this could have on the development of talent, well that’s a longer conversation. You can read the full interview (in Japanese, with Google Translate) here.

Alien: Isolation Mod Tool OpenCAGE is now Available on Steam

For much of my YouTube audience, I am still largely known as ‘the guy who made videos about Alien: Isolation’. And while I’m not done yet (the retrospective is in the edit right now), it’s worth highlighting that a big part of how I made my second video on the topic was courtesy of the OpenCAGE mod by Matt Filer. Amazingly, the mod tool is now available on Steam directly, making it even easier to get in there and play with it yourself.

AI People Has a Level Editor Now…

You may recall my newsletter issue discussing my playtime with AI People, a Sims-like that relies on generative AI for conversation and behaviour. Well, the recent 0.40 update now includes a bunch of new features, but notably a level editor! Check out the trailer below. They also added two new characters, one being an interpretation of GoodAI’s founder Marek Rosa, and the other a pastiche of the newly elected American President. Great…

DeepSeek Strips Over $1 trillion from the US Stock Market

A story that is highly relevant to today’s conversation was the release of a new LLM-based app by Chinese start-up DeepSeek: an AI assistant that has showcased a level of quality and capability akin to ChatGPT, while reportedly needing only a fraction of the computing resources.

This ties into my point in my predictions for 2025 about smaller, more capable generative models, given DeepSeek’s R1 model is free, can run locally (and therefore the data is private and not being fed elsewhere) and can get a decent level of performance on modest home-based hardware.

This has had a devastating effect on the US stock market, as many companies have advocated for a ‘go big or go home’ approach to building AI models, and DeepSeek suggests that might not be necessary. As a result, stocks and company value have dropped across the board, notably Microsoft (lost $7 billion), Google (lost $100 billion), and critically Nvidia who saw the biggest one-day drop in US stock market history with shares dropping 17% and losing $600 billion in value. In one day.

Ubisoft Shuts Down Ubisoft Leamington, with 185 Layoffs Across Multiple Studios

While I’m happy to see the DeepSeek news fit with my predictions for 2025, this one I’m not pleased to be proven right about. A couple of weeks back, I said layoffs would continue across the games industry as companies struggle with economic stability. Critically, I listed a handful of companies to watch for bad news on the horizon, and Ubisoft was one of them.

On Monday came word they’re laying off 185 employees across their Dusseldorf, Stockholm and Reflections studios - which includes the complete shuttering of Ubisoft Leamington in the UK. It really sucks, and I’ve had the pleasure of covering some of the work by the Leamington team over the years, notably on Tom Clancy’s The Division (see the video below). Best of luck to all those affected, and if there’s any jobs and related info readers can share to help feel free to drop me a line or leave a comment.

AI and Copyright: A Consultation

For this week our big issue is the current consultation from the UK government on the future of copyright in an age of artificial intelligence. For this issue we’re going to dig into the following:

What this consultation is exploring, and why it is relevant right now.

The overarching issues it touches upon in current AI developments.

What relevance this has to broader UK government policy.

What relevance this has to creative industries, and specifically video game development.

How the UK should be responding to the current AI climate.

Disclaimer: Given we’re getting into the weeds on a variety of laws and legislation it’s worth me reminding you all that:

I’m not a lawyer!

This is not legal advice!

Rather, I’m simply someone with a lot of experience in AI within the games industry who finds himself immersed in this space all too often nowadays. So yeah, please bear that in mind!

About the Consultation

So what is this about? Well to begin with the UK government, like many others, will open up consultations to assist with their decision making as they seek to take action in a number of circumstances. This can range from the formation of political policy, to investment, regulation, but also broader engagement with existing civil and business groups around pertinent issues. There’s also an element of transparency, in that it helps give the public some insight into what the acting government is showing interest in, and what their perspectives are on the issue.

This particular consultation opened to the public on December 17th, and has proven to be highly contentious since its publication. The crux of the call is to discuss the efficacy of the UK’s legal framework, notably around copyright and the ownership of creative assets, and how that sits with the burgeoning future of artificial intelligence. More specifically, it’s about the training and development of generative AI models like OpenAI’s GPT and DALL-E, Google’s Gemini, and Stability AI’s Stable Diffusion models that rely on data in order to generate the text, images, and other artefacts that they produce.

It speaks to the legal headache that has emerged over the past few years as corporations have raced to build these ever larger AI models, relying on vast quantities of data in the process. The internet being such a vast space of available information from which to scrape said data has then led to a variety of legal cases and responsive action the world over, as individuals and corporations across the creative industries challenge what right these companies have to exploit their material in service of some broader, undefined AI vision.

The Consultation, In Short

The consultation has over 50 questions that explore the broader issues surrounding the utilisation of copyrightable materials for the training of AI systems. To quote the consultation description:

Copyright is a key pillar of our creative economy. It exists to help creators control the use of their works and allows them to seek payment for it. However, the widespread use of copyright material for training AI models has presented new challenges for the UK’s copyright framework, and many rights holders have found it difficult to exercise their rights in this context. It is important that copyright continues to support the UK’s world-leading creative industries and creates the conditions for AI innovation that allows them to share in the benefits of these new technologies.

The consultation is then looking to address three key issues (in my own words):

Clarity on the rules surrounding how AI developers can utilise copyrighted materials.

Improving the protections creatives have on where and when, if ever, AI developers can utilise copyrighted works. In the event they are used, what compensation, if any, can be attributed to the material’s adoption.

Finally, ensuring that AI developers have access to “high-quality material” to train leading AI models from within the UK.

So this is very much leaning into the current discourse, and the issues that surround the largest developments in generative AI. Perhaps unsurprisingly, this has not been well received by creatives due to the implications throughout. Notably, that the genie is well and truly out of the bottle, and that generative AI - by virtue of its design - requires data generated by humans in order to reflect that data on some level or form (i.e. it reads text written by humans, such that it can generate text like humans do). Note however, that this consultation is not about whether or not an AI asset can attain copyright; that’s very much a separate issue - and one that I sincerely doubt a broader consultation would make sense for.

The implication that generative AI is here to stay is clear across those three issues. While it’s understandable that people may not like it, it is a reasonable stance to take and it is in the government’s interests to provide a legal framework for what is deemed permissible, and that protections be applied to the creative industries within the UK.

But of course, it’s worth understanding that this is about far more than just what’s happening in games and other creative disciplines.

The Background: AI and UK Government Policy

This consultation is being driven by the newly elected Labour government in the UK, who back in December announced what they called their ‘Plan for Change’, a series of missions they have set for themselves to address many of the issues impacting British society.

The ‘Plan for Change’ is broken down into six areas:

Strong Foundations: For the rest of the government’s strategy to prove effective, they believe it relies on addressing three core areas: economic stability, secure borders, and national security.

Kickstarting Economic Growth: Between the COVID pandemic and the UK’s departure from the European Union, the economic impact continues to be felt today. Public and private investment has stagnated, inflation peaked at 11%, the country’s debt has reached 80% of GDP, and living standards are the worst in modern history, with increasing income inequality. As such, the government wants to deliver growth to businesses, address the geographic inequalities within the UK, drive innovation in various areas of science and technology, and provide better training, pensions and job security.

The National Health Service (NHS): The public health system has seen significant challenges over the past decade due to neglect and mismanagement. Labour’s immediate priority is the waiting lists, which have reached record highs, but also tackling the systemic issues that lead to people needing healthcare to begin with.

Safer Streets: To improve public confidence in the criminal justice system by addressing crime with knives and other weapons, better neighbourhood and community-led policing, and tackling issues of domestic abuse and violence against women and girls.

Barriers to Opportunity: Providing better childcare, education, and career pathways, while also tackling issues of child poverty and social welfare.

Clean Energy: Putting the UK on track to be powered by at least 95% clean energy by 2030.

So unsurprisingly, the push for artificial intelligence aligns very much with the ‘Kickstarting Economic Growth’ target, but in truth it also has potential impact on a lot of other areas here, from the rising exploration and potential adoption of AI in healthcare, to use in crime prevention, as well as ultimately providing better education in what is considered a frontier technology.

This isn’t terribly surprising. Despite the UK’s historic impact in AI research from academic institutions such as Oxford and Cambridge University, Imperial College, and the University of Edinburgh, plus the likes of chip manufacturers ARM, the UK has fallen to a distant 3rd place in the AI arms race behind the US and China, with India not far behind it. Even though monumental work is conducted by the likes of Google DeepMind in the UK, it is of course a subsidiary of a US-based corporation. It’s equally unsurprising given that the previous government led by Rishi Sunak showed great interest and enthusiasm for the UK to be something of a leader on AI. Though what impact it is having on the broader conversation, notably with the EU having already made significant steps towards its own regulation and enforcement, remains to be seen.

The UK’s AI Action Plan

Since the initial announcement in December, Starmer has come forward to discuss the potential of AI and how it fits into the Plan for Change. The Government published an ‘AI Opportunities Action Plan’ on January 13th, outlining the government’s perspective both on where the UK sits in the broader AI landscape, as well as the opportunities they see throughout.

This action plan has already come under criticism both for playing into the hands of large AI companies (many of whom would happily exploit the British state and deliver little in return), and because for every good idea it has, it has another one that seems horribly naïve. While I personally applaud some of the suggestions made, notably the idea of state-controlled training datasets with regards to things like cultural artefacts and even health data, there is equally much to be concerned about. While I do think health data could be useful in numerous circumstances, it’s important it be carefully cleaned, moderated, and its purposes carefully controlled. Meanwhile the suggestion that we embrace AI wholeheartedly to address issues across the civil service and broader public services is just stupid. The idea that we can use AI to prevent potholes might sound good in a high-level meeting, when in truth we could better resource councils and community-led initiatives that can actually deliver results.

From my perspective it all feels rather rushed. We’ve suddenly heard a lot about the UK government’s interest in AI within less than two months. This is undoubtedly a concern of mine given it seems like they’re rushing into things without the proper consultation or guidance. Just this week came an investigation from The Guardian highlighting that a lot of experimentation in government departments is failing, and it strikes me as a desire - like a lot of broader commercial businesses - to be seen to be embracing AI without thinking about why they’re doing it, and what parts of their work suit AI adoption. This was a topic discussed in the GamesIndustry.biz panel I participated in last summer.

Equally as concerning is that, as mentioned in both the government announcement and the press, this action plan has a lot of backing from leading tech firms - and the prototyping the government has conducted thus far has seen them collaborate with the likes of Microsoft, Google, and OpenAI. This is playing very much into the hands of big AI companies and their perspectives on how this technology should evolve - more on that in a second. But what benefit does that bring to the end customer, i.e. British citizens?

Well, based on their contribution, there doesn’t seem to be a material impact on the job market. There is little in the way of meaningful analysis on how AI will enable economic growth outside of the platitudes mentioned in the report.

Meanwhile, it has been reported that the Prime Minister’s advisor on AI, Matt Clifford, has recently stepped down from being a member of an AI-focussed investment fund - a potential conflict of interest - after an investigation brought it to light.

While it’s all well and good that we start looking at how to embrace AI as part of improving a lot of our processes, again I have to ask: are we approaching it from the right perspectives and a suitably informed position?

The Action Plan & Copyright

But critically for us, the action plan makes a suggestion that the UK change the existing rules and regulations around how AI intersects with copyright and intellectual property law. To quote:

24. Reform the UK text and data mining regime so that it is at least as competitive as the EU. The current uncertainty around intellectual property (IP) is hindering innovation and undermining our broader ambitions for AI, as well as the growth of our creative industries. This has gone on too long and needs to be urgently resolved. The EU has moved forward with an approach that is designed to support AI innovation while also enabling rights holders to have control over the use of content they produce. The UK is falling behind.

AI Opportunities Action Plan, Section 1.4 “Enabling safe and trusted AI development and adoption through regulation, safety and assurance”, January 13th, 2025.

So to unpack this a little, this is referring to the EU’s 2019 Directive on Copyright in the Digital Single Market. Notably, the EU has specific rules on text and data-mining (TDM), i.e. the trawling of the internet to scrape information for use in data processing - which is the crux of generative AI. This directive has two exceptions that permit the ability to pursue this broadly:

It is permissible should the work be conducted by research organisations and cultural heritage institutions.

Any beneficiary can apply data for any manner of use, but this can be overridden by an opt-out, which relies on the copyright holder declaring they do not want their data being used for training.

Personally, this already feels like a horribly outdated set of regulations, given that TDM is now commonplace in contemporary AI development. On top of this, the notion of ‘research organisation’ is incredibly broad. Is that a university lab? A non-profit? Or the research wing of a corporation? This interestingly was not updated or touched upon in any meaningful way with the creation of the EU AI Act. Rather, it’s expected that these two sets of legislation work in tandem with one another, given that the EU AI Act does state that copyright must be protected and respected in the deployment of all AI models despite the TDM exceptions - though how that is practically enforceable (like a lot of this stuff) is not clear.

And it’s this practical application that is the crux of the issue. Notably, the second exception permits any and all usage unless the copyright holder states otherwise. Under this approach, the person who owns the asset needs to declare that they do not wish for their work to be used in AI training. This puts the onus on the creative, rather than the AI company, to ensure compliance, which can be time-consuming and frankly impossible to guarantee.
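To make the opt-out burden a little more concrete, here is a minimal sketch of how a machine-readable opt-out might look in practice. It assumes the opt-out is expressed through a robots.txt file, which is one common web convention and not something the consultation itself specifies. The crawler name `ExampleAIBot`, the URL, and the helper `may_crawl` are all hypothetical, made up purely for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a studio's website: it turns away one
# (made-up) AI training crawler and allows every other crawler.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def may_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules let `user_agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/art/hero.png"
    # The named AI crawler is turned away by the studio's rules...
    print(may_crawl(ROBOTS_TXT, "ExampleAIBot", page))
    # ...but any crawler the studio didn't think to name is let through...
    print(may_crawl(ROBOTS_TXT, "SomeOtherBot", page))
    # ...and a site with no rules at all is open to everyone by default.
    print(may_crawl("", "ExampleAIBot", page))
```

The point of the sketch is the default: the creator has to know about each crawler and name it, and anything they miss is permitted. It also only works if the crawler chooses to check the file in the first place.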

Video Games and Copyright

So what does all of this mean for the creative industries in the UK, and critically for us in game development? Well let’s start by talking about what we consider intellectual property protection for video games. Copyright protections are important to help ensure innovation without outright imitation. Games as a medium is one in which we regularly seek inspiration from within the industry (and beyond), but it’s important that any subsequent outputs not be so heavily inspired that they outright infringe on the rights of creators.

Video games are creative works, and are typically treated as a single entity in the eyes of copyright, but they’re also treated as a collection of copyrightable assets. This, alongside the use of trademarks, helps enforce the protection and adoption of assets within them. These range from character models to textures, storylines and lore, sound effects, and spoken dialogue; even the underlying code itself can be considered a copyrightable asset.

However the ideas of a game’s design are not enforceable in copyright. A game can’t be denied copyright simply because it takes an idea from another and adopts it for its own purposes. This would also make little sense in the games industry because entire genres can emerge off the back of a single game - like how first-person shooters were popularised by DOOM, or battle royales with PlayerUnknown’s Battlegrounds [PUBG] - and inspiration and imitation are rife among them.

This isn’t to say that legal action cannot be taken against a developer. There are many instances - notably on Steam and mobile platforms - where ‘clones’ of games emerge. They typically fall under these four categories:

The game mimics aspects of an existing title and potentially even uses some of the inspiration’s original assets to achieve that.

See games like Flappy Bird or Palworld, which seem to borrow heavily from the Mario and Pokémon franchises respectively.

There’s been much conversation this past year over whether Palworld is using assets (directly or indirectly) from existing Pokémon titles.

The game is using freely accessible assets to mimic an existing game.

The Last Hope: Dead Zone Survival was pulled from Nintendo eShop after it was pointed out that it was an egregious clone of The Last of Us.

The game uses existing assets from the public domain, or from a content pack, or a previous game, and then the developer seeks to sell that as a game in itself.

I’m reminded suddenly of the ‘Digital Homicide’ debacle. Ugh.

The game outright clones an existing one in order to ride on its success.

See the legal battle between Tetris and Mino from back in 2008.

Unsurprisingly, it’s a very grey area. But it’s one that runs rampant in games all the time. Many a games publisher has legal teams scouring the internet on a regular basis to catch violations of their copyright.

What the UK’s Proposal Means for Games

In the AI consultation, the first real questions are focussed on the adoption of a mechanism similar to the EU’s TDM policies (Sections B1 to B4 of the consultation documentation, Q4 and Q5 of the response). The way this is posited, as quoted below, suggests that the government - as is stated by the Action Plan - wants to lean towards embracing this approach.

4. Do you agree that option 3 - a data mining exception which allows right holders to reserve their rights, supported by transparency measures - is most likely to meet the objectives set out above?

(YES/NO)

5. Which option do you prefer and why?

Option 0: Copyright and related laws remain as they are

Option 1: Strengthen copyright requiring licensing in all cases

Option 2: A broad data mining exception

Option 3: A data mining exception which allows right holders to reserve their rights, supported by transparency measures

Burrowing into the details, the key distinction between Option 2 and Option 3 is that, while AI training on copyrighted material is legal and permissible in both, in Option 3 a company can by default train on copyrighted assets to which it has ‘lawful access’ unless the right holder has reserved their rights. This would mean that unless a company or individual comes out and objects, it’s simply a free market for AI companies to use that material without any negative consequences. To quote the documentation:

AI developers would be able to train on material to which they have lawful access, but only to the extent that right holders had not expressly reserved their rights. It would mean that AI developers are able to train on large volumes of web-based material without risk of infringement. Importantly right holders are also able to control the use of their works using effective and accessible technologies and seek payment through licensing agreements.

This means several very big implications for game developers:

Unless you object, any aspect of a game made in the UK is available for an AI company to exploit.

The textures, the storyline, the dialogue, the code. All of these elements can be mined out of the game and used to train and this would be deemed permissible under UK law.

Publishing anything about your game makes it open to AI training.

Before the game is even out, an AI company could use your promotional art, even your trailer and scrape relevant assets for training.

The work is on the creator of the game to ensure their work is not used in AI training.

You have to put in the effort to mitigate the risk of AI training.

While as argued, it aligns somewhat with the direction the EU is taking with their legislation, none of this has been really challenged yet on a practical level to suggest this is protecting anyone.

Perhaps more critically, it leaves the UK in a situation where there is a disparity between how copyright law applies to humans and how it applies to AI, in that a human creator must apply for a licence to use the existing works of the creator, but a machine does not.

A Bad Deal for UK Creatives

OK, gloves off. It doesn’t matter how you shake it, this is a bad deal, and one that puts the power directly in the hands of AI companies, rather than creatives. It falls into the same traps, the same mistakes, I see so many governments and thought-leaders fall into, and that is to placate large-scale AI companies in pursuit of an outcome that, frankly, isn’t going to happen, and doesn’t benefit anyone but them.

It’s well established that large language models (LLMs) the likes of OpenAI are building need large quantities of data in order to work effectively, but for the vast majority of practical use cases for LLMs (summarising knowledge, generating new data akin to existing styles) we have more than a sufficient amount of data needed for those purposes. When you see OpenAI or Google advocate that they need more data, it’s to build their large-scale general purpose generative models - known as foundation models. This is all part of their pursuit towards ‘general purpose AI’ or ‘general intelligence’. An ideal that has existed in AI for a long time, and right now generative AI appears to be the way to reach that goal.

Now I don’t agree with this ideology given that generative models are statistical systems by design - they generate data that is statically similar to that which it was trained on (e.g. give an LLM Shakespeare, it will then be able to produce something that on the surface looks and reads like Shakespeare), and as such I do not see that as being the solution to achieving general intelligence AI given it lacks any meaningful mechanism to process or reasoning disconnected from its data. Heck even my colleague Julian Togelius, who wrote a book on general intelligence AI, is fed up of hearing people talk about it of late.

But what it provides is a convenient mechanism for the pursuit of AI to completely overrule the copyright of individuals. As discussed back in the issue on the SAG-AFTRA strike, we’re in an age where the data of the individual is the most valuable asset in the digital economy. Be it your face, your voice, but also your artistic expression. There are laws in place to protect some of this, and the regulations are evolving, but the capitalists want to insert themselves into the process of building these new age regulatory frameworks because it suits them. The UK government is listening to these companies say that this data is needed to enable an AI future that isn’t going to happen, and the pursuit of which will screw over everyone else.

I believe that AI has its value in a lot of use cases, but opening the doors and letting corporations gut the UK hoping for some form of trickle-down economics (y’know, that economic theory that never works in practice) whereby it then somehow magically enriches everyone else is incredibly dangerous - and critically, it’s plain stupid!

Things like this suggestion to edit copyright law are the snowflake that starts an avalanche, in which everything is eventually up for sale. It moves us towards a future where smaller creatives who lack the finances to build their legal protections are effectively punished for trying to indulge in the arts. If you seek to explore the creative arts, then you had best ensure you’ve explored all the legal implications of how your work could be exploited before you dare start.

And of course while it is argued there are protections, and that it will operate much akin to the EU, we’ve yet to see how practical the union’s AI provisions are. Meanwhile, this is the farthest the UK has come with any form of AI legislation - with no real AI regulations in sight.

All of this is creating a situation that is highly undesirable for the UK creative sector, and at a time when many corners of it continue to struggle post-Brexit. Feels like a slap in the face. It feels very much like a Conservative policy dressed in Labour’s clothes.

What The UK Should be Doing

At a time when OpenAI is fighting lawsuits left and right as a result of copyright infringement (the latest coming from Indian news outlets), when a couple weeks back Anthropic had to add protections against song lyrics being exploited, when none of these corporations can actually point to any material profit being made from their AI provisions, now is not the time to placate them, but rather to build ecosystems that support healthy and safe innovation of this technology.

The UK has an opportunity to exist in a sensible middle-ground. Rather than going the route of the bigger-and-better approach of the US, it can show how to do things in legal, ethical, and practical ways. Investing in companies that build AI systems that are in line with our climate change objectives of hitting net-zero by 2050. By looking at how to build smaller large language models - annoyingly abbreviated to SLMs, and not to be confused with just old-school language models - that can run on-device, and are more performant. Rounding that out with a practical copyright infrastructure would help show the UK as punching above its weight by using our existing expertise to deliver smart, sensible AI tools and systems that in turn - by virtue of our copyright systems - would more or less guarantee compliance with much of the broader EU AI regulatory framework.

Don’t Believe What OpenAI Is Selling

OpenAI and their ilk have tried to sell to the world that we need to give them data to build these systems or they’re not profitable. I mean they’re not profitable anyway, because GPT is a product without a market. And Nvidia has most certainly capitalised on that by cornering the market on AI compute hardware. But there are many ways in which generative AI can be built without that data and still suit our needs.

As

discussed on his own Substack, OpenAI is a house of cards built atop the most expensive tech stack in human history, but is one that has no real defining unique selling point. It was the company that helped showcase that this technology works, but other companies are going the route of innovating on the technology. Making better models for specific purposes, making smaller models.

I can train an LLM on my laptop, with open source data and models, and not run the risk of litigation. I can train an AI model to suit a very specific purpose without infringing on the rights of others or clogging up the legal system with these damaging acts. And this brings it back to the creatives. The UK Government should aim to become one of the first nations in the world to start more aggressively defining the rules, regulations and processes of how generative AI can exist in modern society - taking a page from the EU’s AI Act. After all, if this data is so valuable to AI companies, they should be paying for it. They should be operating within defined rules of law that support the very creative industries that they rely on so much for their work to develop.

Open-Sourced Serendipity

I mean the serendipity of the DeepSeek news is just insane. I had literally finished writing this article on Monday (27th January) after slowly chipping away at it since I got back to my desk at the start of the month, only for my actual point of challenging the US perspective on AI to be reinforced so dramatically in the stock market.

This technology is still developing, and as I’ve intimated the approach taken by the US of bigger/more powerful is not sustainable - be it financially, ethically, or in the context of climate either. Yes we’re aware that bigger models often yield more powerful results, but in truth the gains are diminishing with each new iteration and the costs simply aren’t practical.

This is why DeepSeek has caused such a furore, given it’s not just smaller than GPT, it’s outperforming it at most levels, and is significantly cheaper! Naturally it’s early days, but in just one day it has not only challenged the US strategy, it has fundamentally weakened it. This is why the stock market is reacting so aggressively, as the entire US approach of ‘more, more, more’ is now being questioned.

I’m no expert in LLM development, I’ll be the first to admit that, but it was obvious to me that this was the only viable direction in the long-term. We’d start figuring out how to do more with less. The UK should be embracing that, and working with relevant hardware partners like Arm to build out the UK’s capabilities.

Opt-In is the Only Sensible Way Forward

Lastly, returning to the actual copyright issue, an opt-out approach is laziness of the highest order: it places the labour on the creative, who should just be creating by default. As I said earlier, the government’s stance is incredibly Conservative (note the big C) in nature, in that it seeks to absolve the state of responsibility. I find that frustrating. It also conveniently absolves the AI companies (many of whom have the financial security to dedicate time and resource to building out licensing agreements) from having to deal with this work.

An opt-in system means that by default the work of the creative industries is protected. The law should continue to work to protect British citizens, and support the creative sector by providing guidance on how licensing should work for creatives of various sizes across different industries.

I will take a second to clarify that, yes, TDM exceptions should continue to be permissible. It is important that we find ways to build accessible datasets everyone can use for specific non-commercial purposes. But these exceptions should only be available to research institutions, with stricter regulation on how they work. After all, I’m sure OpenAI still advocate that they’re just a research organisation at this point.

Submit Your Response

You can submit your own response to the consultation via the Intellectual Property Office’s website. Here are some relevant links:

The consultation closes in less than a month’s time on the 25th February. Let me stress, having done it myself, that the form you complete is lengthy, and so I would set aside at least an hour of your time to work through all of the questions.

In addition, if you’re based in the UK you may wish to find out (assuming you don’t know already) who your local member of parliament (MP) is, and write to them on the subject.

Wrapping Up

Wooft! That was a heavy issue this week - not exactly fun and upbeat, huh? So yeah, next week is our monthly Digest issue, and then for the next full-blown deep-dive, we’re going to do something fun! My long overdue post-mortem of my time attending a research seminar at Schloss Dagstuhl in Germany last summer on the future of AI for game development!

Plus, tune-in next week as our first batch of videos from the 2024 AI and Games Conference will be going live on our new dedicated YouTube channel!

Thanks for sticking it out this far, and I’ll see you all again soon!

Some extremely naive takes in this article.

"I can train an LLM on my laptop, with open source data and models" => do you have any idea how false this is? You obviously have not tried to do this. Yes, you can train an LM, but not a LLM, or anything remotely useful. "open source models" yees these exist e.g. LLaMa, but are also massive and trained to the detriment of the environment etc - frankly you have no chance of finetuning even these on a laptop. The weights alone of LLaMa 3 are 16GB, and training will require even more VRAM.

A product without a market? How come OpenAI is earning *billions* in revenue each year then? Yeah they're making a loss, but the product is absolutely useful - nowhere near as much as advertised, I agree, but it absolutely helps with writing code amongst other stuff.

Unfortunately, it IS necessary to have a ton of data to produce LLMs (yes this is a fundamental issue with deep learning), but even if you don't see the point, many others do. If you don't allow companies to steal everyone's IP to train on, China *is going to do it*, and good luck taking DeepSeek to court to complain when they're not based in the UK.

IMO people care way too much about their stuff being trained on. If you are an indie game dev and your art is added to a training dataset, how does this actually negatively impact you in any way? Your art is such a small drop in the ocean of training data, it's not like everyone will suddenly be reproducing your style. The vast majority of training data is stock images etc that nobody cares about, artists complain way too much about this.

I enjoy your newsletter, but please stick to talking about topics you have a clue about.

For anyone who wants to add to the UK government's consultation on copyright 'protection' (i.e. no protection at all) against AI companies' exploitation, the ALCS has a brilliant guide to help you unpick the questions:

https://www.alcs.co.uk/news/your-rights-are-under-threat-from-ai-its-time-to-have-your-say/

British citizens can respond to the survey here:

https://ipoconsultations.citizenspace.com/ipo/consultation-on-copyright-and-ai/consultation/