AI and Games is made possible thanks to our paying supporters on Substack. Support the show to have your name in video credits, contribute to future episode topics, watch content in early access, and receive exclusive supporters-only content on Substack.

If you'd like to work with us on your own games projects, please check out our consulting services provided at AI and Games. To sponsor our content, please visit our dedicated sponsorship page.

As developments in generative AI have continued at a breakneck pace, we've seen a growing number of AI-simulated 'games' crop up in various contexts. You can interact with them and influence how they behave, and if you squint a little it might seem like you're playing a video game, but these are AI models trained to simulate the behaviour of video games.

But while there's been a lot of hype on the internet suggesting these are the first steps towards creating video games without game engines, the reality is far more complicated. These 'games' are expensive to make, struggle to stay consistent, require tremendous compute resources to train, and in some cases were not even built with the idea of being playable games in mind.

This is AI and Games, and it's time to get to the bottom of these AI game simulations. Let's summarise what this technology is all about, combat some of the false narratives that have quickly emerged around it, and discuss these projects in a little more detail.

AI Simulated 'Games'

As we've seen in the past few years, generative AI enables the reproduction of assets such as text, images, and audio at levels of fidelity that were not previously possible using existing AI techniques. These models, be it large language models like GPT, Llama or Claude, or image generators like DALL-E and Stable Diffusion, build statistical representations of the data they are trained on. This means that GPT learns to write text that seems sensible because it has seen hundreds, thousands, if not millions of similar examples. But ultimately it doesn't understand what you asked it, nor does it understand what it told you. Instead it generates the most statistically likely answer to the prompt it is given.
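To make "most statistically likely" a little more concrete, here is a deliberately tiny sketch in Python. It's a bigram word counter, which is nowhere near how GPT, Llama or Claude actually work (they are neural networks predicting tokens), but it shows the same basic principle: the output is simply whatever most often followed in the training data, with no understanding involved. The function names and the three-sentence corpus are purely illustrative.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count how often each word is followed by each other word."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def most_likely_next(counts: dict[str, Counter], word: str) -> Optional[str]:
    """Return the word seen most often after `word`, or None if never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = [
    "the player opens the door",
    "the player fires the shotgun",
    "the player opens the map",
]
model = train_bigrams(corpus)
print(most_likely_next(model, "player"))  # opens (seen twice, vs fires once)
```

The "model" has no idea what a player or a door is; it only knows which words tended to come next.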

As generative models have improved, they've enabled AI-generated video: a natural extension of image generation that also factors in how images relate to one another over time. And now this work has gone one step further. Video generators like OpenAI's Sora generate clips of up to 60 seconds at a time, but these AI ‘games’, as it were, don't just composite video on a frame-by-frame basis; they also factor in user input between frames. In essence this makes them look like playable games, when in truth they're more like interactive videos based on a simulation of how those games work, one that, as we'll see shortly, cannot be maintained for long.

The three we're going to focus on today are:

GameNGen: a neural model published in August of 2024 showcased by running the original DOOM from 1993.

Oasis: a project released in October 2024 that simulates playing Minecraft.

Muse: a model for simulating gameplay of Bleeding Edge, published in February of 2025.

Now, the other major example of this is 'Genie', a generative model published by Google in February of 2024. Genie is a more general-purpose foundation model built for simulating virtual worlds from a prompt, and it learns to do so from copious amounts of video data. While very similar to these projects in principle, we're not going to focus on it in today's video.

As we jump into this, it's worth addressing the AI-generated elephant in the room: regardless of the hype and noise surrounding these projects, they're not practical for day-to-day game development. And while there is a lot of apathy and frustration surrounding generative AI, given the hype muddies the waters when we talk about how AI is actually used in game development, we're covering this topic on AI and Games because it's worth discussing what these systems are actually doing, and why they're interesting from a research perspective. I want to give you, our audience, a better understanding of not just what these models are doing, but why they're not going to change how games are made anytime soon. While Oasis and GameNGen suggest this could be a future reality, that was never the intent of Muse, but it was lumped in with these projects in the broader narrative courtesy of how it was marketed. In the meantime, if you want to know how AI is used in games today, be sure to check out our AI 101 article on that subject.

Learning Games v2.0

If I may step away from the AI hype briefly, these AI models are, from a research perspective, really interesting: they take an idea that sits within much of the work we see on machine learning for games, including the stuff we've covered on this channel, and express it in a different way.

If you consider some of the big stories surrounding machine learning in games in recent years, from Gran Turismo 7's 'Sophy', to Dota 2's OpenAI Five, or the AlphaStar system for StarCraft 2, in each case they're learning to play a game. But in order to play a game, they need to build an understanding of both how that game operates and how people act within it. As discussed in my analysis of GT Sophy, the AI driver has to factor in how to drive the car in the conditions of the track while also respecting the rules of driver etiquette that Polyphony and the broader Gran Turismo community expect of drivers. In our episode on Little Learning Machines, the NPCs you control have to learn how the game works, and then act based on the rules you prescribe to them. But we've also seen work where AI learns to excel at a game because it is given vast quantities of data to build upon. In our breakdown of Forza's Drivatar we discussed how it learns to race by observing the player's previous efforts. Meanwhile, as covered in our issue on AlphaStar, it learns the fundamentals of StarCraft by using imitation learning to mimic the behaviour of professional players through replay data.

My point is that, in all of these cases, the AI system learns the rules of the game, either by playing the game itself or by observing how humans play the game. This is of course something humans do on a regular basis. We either pick a game and give it a crack, or we watch people play a game next to us on the sofa, or on livestreams on YouTube or Twitch, to get a feel for how that game works and how others play that same game. We build an understanding of different systems in the game - like how NPCs behave, or the physics of the world - as well as how our actions will influence the game. We don't necessarily understand *exactly* how that game works - unless of course you actually worked on the game - but we build a good enough approximation that we can imagine how things will pan out in certain scenarios, and can use that knowledge to y'know, maybe have some fun...?

This is in essence what these new generative models are doing. Previously our AI learned how the game behaved, and how actions influenced it; now these generative systems also simulate what that game looks like. In all of these earlier examples, the game data was filtered down to only the critical gameplay information. Here, by contrast, the model is fed visual data as well as the inputs, and from that it builds its own understanding of the underlying game behaviour and learns to reproduce the visuals it has seen before. They're all working on the same idea: what we call 'Next Frame Prediction', which is rather self-explanatory. By feeding in the current and previous frames, combined with a set of input signals, can the model predict what the next frame of the game would look like?
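The next-frame prediction loop can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the architecture of GameNGen, Oasis or Muse: `toy_predict` is a hypothetical hand-written stand-in for the trained network, and the frame format and `context` length are made up. What it does show is the shape of the loop: recent frames plus the player's latest input go in, a predicted frame comes out, and that prediction becomes part of the input for the next step.

```python
from collections import deque
from typing import Callable, Sequence

Frame = list[list[int]]  # a tiny greyscale image: rows of pixel values
Action = str             # e.g. "left", "right", "attack"

# Hypothetical interface for a trained model: recent frames and recent inputs
# in, predicted next frame out.
Predictor = Callable[[Sequence[Frame], Sequence[Action]], Frame]


def play(predict: Predictor, first_frame: Frame, inputs: Sequence[Action],
         context: int = 4) -> list[Frame]:
    """Autoregressive rollout: every predicted frame is fed back in as input."""
    frames = deque([first_frame], maxlen=context)  # only recent history is kept
    actions = deque(maxlen=context)
    rollout = [first_frame]
    for action in inputs:
        actions.append(action)
        next_frame = predict(list(frames), list(actions))
        frames.append(next_frame)
        rollout.append(next_frame)
    return rollout


def toy_predict(frames: Sequence[Frame], actions: Sequence[Action]) -> Frame:
    """Stand-in for the neural network: slides a single lit pixel left/right."""
    row = frames[-1][0]
    pos = row.index(1)
    step = {"left": -1, "right": 1}.get(actions[-1], 0)
    pos = max(0, min(len(row) - 1, pos + step))
    return [[1 if i == pos else 0 for i in range(len(row))]]


start = [[0, 0, 1, 0, 0]]
out = play(toy_predict, start, ["right", "right", "left"])
print([frame[0].index(1) for frame in out])  # [2, 3, 4, 3]
```

Because each prediction is fed straight back in, any mistake the model makes becomes part of its 'reality' for the frames that follow, which is one reason these simulations drift over time.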

Now you'll have noticed from the footage of these projects that these next frames, well... they look like shit. That's because the models render at low resolutions, given that the compute required to train and run them increases as the overall resolution increases. At best the GameNGen (DOOM) model doesn't look too bad, but that's largely because the original release of DOOM had a native resolution of 320 x 200 pixels. Meanwhile Muse looks like it's rendering Bleeding Edge in a GIF that was published in 1998, and that's because the main model Microsoft Research built only renders at 300 x 180 pixels.
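Some back-of-the-envelope arithmetic shows why resolution matters so much. Pixel count grows with the square of linear resolution: 1080p has 9 times the pixels of 360p, and 4K has 36 times. The 640-pixel width for 360p assumes a 16:9 frame, and pixel count is only a rough proxy for compute (the real cost depends on each architecture, and several of these models generate in a compressed latent space), so treat this as an illustration rather than a cost model.

```python
# Resolutions mentioned in this piece. The 360p width assumes a 16:9 frame.
RESOLUTIONS = {
    "DOOM native (GameNGen)": (320, 200),
    "Muse / WHAM": (300, 180),
    "360p (Oasis, 16:9 assumed)": (640, 360),
    "1080p": (1920, 1080),
    "4K UHD": (3840, 2160),
}


def pixels(resolution: tuple[int, int]) -> int:
    """Number of pixels the model has to generate for one frame."""
    width, height = resolution
    return width * height


baseline = pixels(RESOLUTIONS["360p (Oasis, 16:9 assumed)"])
for name, resolution in RESOLUTIONS.items():
    count = pixels(resolution)
    print(f"{name:28s} {count:>10,d} px  {count / baseline:6.2f}x 360p")
```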

And so with this out of the way, let's take a quick look at what each of these projects is doing, how they work, and how they were built.

Oasis (Minecraft)

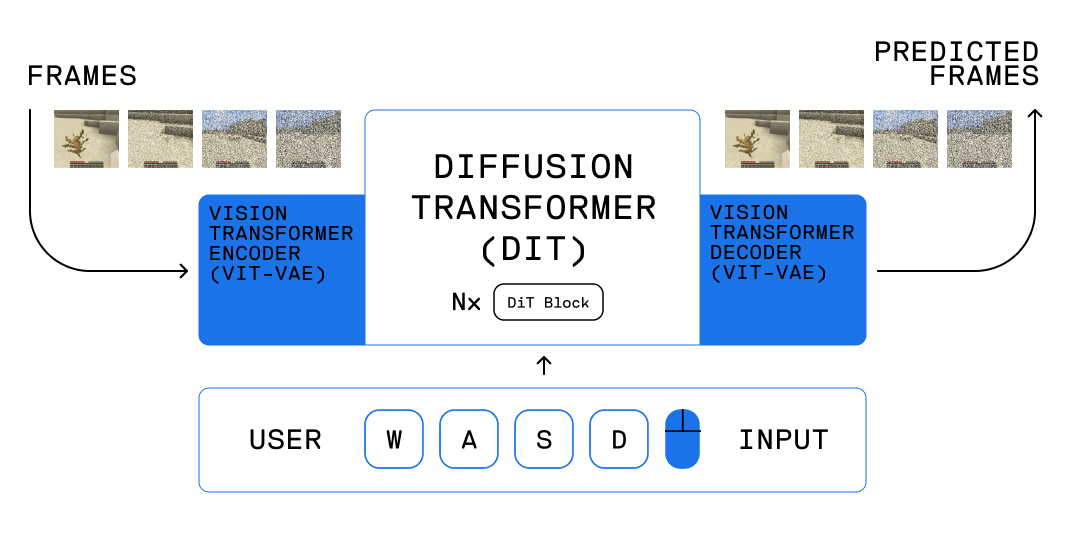

A collaboration between two US-based AI startups, Etched and Decart AI, Oasis renders a simulation of Minecraft at a resolution of around 360p, running at 20 frames per second. You can play it in a browser, and only in a browser, because as we'll see in a moment, almost nobody reading this could afford to run it on their local device.

Oasis is achieved using a custom AI architecture that relies on two specific things. The first is a spatial autoencoder, which looks at the input it receives and tries to learn a spatial representation of it: meaning that it can recognise objects, understand movement and navigation, and critically, preserve positional data such that it can recognise where it is in the world.

But the real secret to how this works is that it combines diffusion, the technique image generators use to learn to recreate images, with transformers: not the robots in disguise, but the neural network architecture used in large language models like GPT, which learns context. Through this it can begin to understand relationships of how things should work, such as how physics operates, or dynamics like the creation and destruction of blocks based on user interaction. It then generates each frame of the game as a single image, conditioned on both recent frames and the user's input.

But where did it learn Minecraft from? Decart have been less than forthcoming about this, only stating that it was trained on "millions of hours of gameplay footage". Which I think is a roundabout way of saying they probably scraped thousands of Let's Play videos off YouTube. But thus far nothing else has been confirmed.

When I tried it out in March of 2025 for this article, it was time-limited to around one minute, and the experience itself is very limited beyond this temporal constraint. While the model has got to grips with certain gameplay aspects such as creating and destroying blocks, it struggles with maintaining context. As you can see from the footage, it frequently forgets where you are, more often than not when your vision is blocked by nearby geometry. It then winds up thinking you're somewhere else, so you can simply turn around and find yourself in an entirely new environment. This is because the model can only retain context within a short window, so it easily forgets recent history and generates a different scenario that is equally plausible given its current observations.
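The forgetting behaviour can be illustrated with a toy model. This is a hypothetical sketch, not Oasis's actual mechanism: `ShortMemoryWorld` only "knows" which biome you're in if a frame showing that biome is still inside its fixed-length context window. Stare at a wall for longer than the window and that evidence is gone, so it falls back to some other plausible answer (here a fixed, made-up `fallback`).

```python
from collections import deque


class ShortMemoryWorld:
    """Toy stand-in for a frame predictor with a fixed-length context window."""

    def __init__(self, window: int, fallback: str = "desert"):
        self.context = deque(maxlen=window)  # oldest frames drop off automatically
        self.fallback = fallback             # hypothetical 'plausible guess'

    def observe(self, frame: str) -> None:
        self.context.append(frame)

    def current_biome(self) -> str:
        # Search recent frames for one where the view wasn't blocked.
        for frame in reversed(self.context):
            if frame != "wall":
                return frame
        # Nothing informative left in the window: invent something plausible.
        return self.fallback


world = ShortMemoryWorld(window=3)
world.observe("forest")
world.observe("wall")
world.observe("wall")
print(world.current_biome())  # forest (still inside the window)
world.observe("wall")
print(world.current_biome())  # desert (forest has fallen out of the window)
```

A real model doesn't fall back to a fixed default; it generates a new scene consistent with whatever it can currently see, which is why turning around can reveal an entirely different environment.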

It's generous to say that this is an AI version of Minecraft worth playing, and of course Decart say that this is but a proof of concept, a demo at best. But it's not really a demo to showcase how games can be powered by AI, but rather an exercise in showcasing the capabilities of hardware used to run real-time interactive AI models. While you can download the weights of a downscaled version of the model, to achieve that 360p resolution at 20 frames per second you'd need to deploy it on an H100: NVIDIA's dedicated GPU for AI workloads, whose Hopper architecture is built to accelerate transformer models. This is cool and all, until you jump around the internet and realise that an NVIDIA H100 80GB add-in card will set you back at least $30,000.

But the real story is that Decart optimised the model for running on Etched's new Sohu chip, which they believe will enable 4K render quality at a more cost-effective level. So it's worth pointing out that all of this is really a means to highlight one company's AI tools, and another's AI hardware, all so you can play what is unquestionably the most expensive version of Minecraft that's ever existed.

Or, well, calling it Minecraft is perhaps not accurate, given the whole project is legally rather ambiguous. Microsoft themselves have stated that this is not an officially sanctioned or approved Minecraft project.

GameNGen (DOOM)

Just a few months prior to Oasis, a team of researchers at Google announced GameNGen (pronounced 'Game Engine'): an AI model that, as stated in the research paper published on arXiv, aims to "real-time simulate a complex game at high quality". Unlike Oasis, which is advertised by its developers as an "experiential, realtime, open-world AI model", the research team working on GameNGen very much refer to their work as an AI game, given it's being powered by an "AI engine". To quote the paper:

"We show that a complex video game, the iconic game DOOM, can be run on a neural network (an augmented version of the open Stable Diffusion v1.4 (Rombach et al., 2022)), in real-time, while achieving a visual quality comparable to that of the original game. While not an exact simulation, the neural model is able to perform complex gamestate updates, such as tallying health and ammo, attacking enemies, damaging objects, opening doors, and persist the game state over long trajectories. GameNGen answers one of the important questions on the road towards a new paradigm for game engines, one where games are automatically generated, similarly to how images and videos are generated by neural models in recent years. Key questions remain, such as how these neural game engines would be trained and how games would be effectively created in the first place, including how to best leverage human inputs. We are nevertheless extremely excited for the possibilities of this new paradigm."

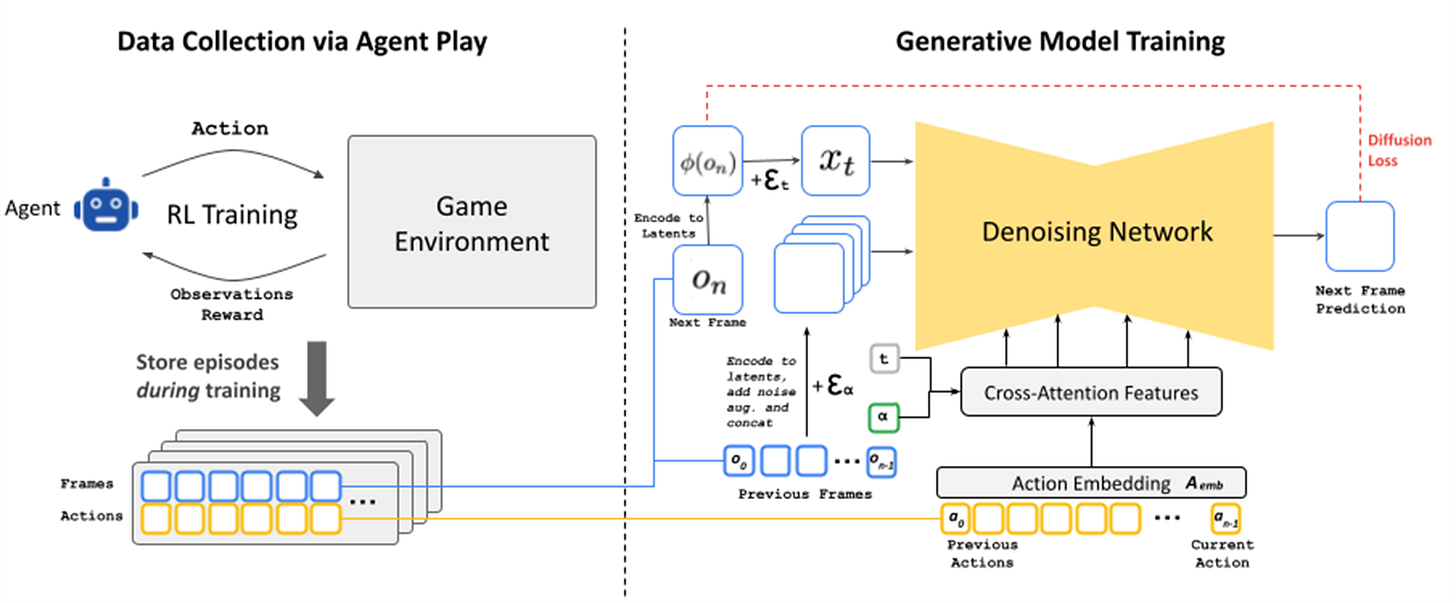

Rather than scrape videos off the internet, the team trained a bot using reinforcement learning: the agent is given a set of reward values for what it should and should not do when playing the game. It scores positive rewards when it hits or kills an enemy, finds new areas of the map (with a bonus for secret areas), or finds new weapons, and it is encouraged to keep its health, armor and ammo at good levels. It is punished if it gets hurt or, of course, if it dies. This is the one part of the entire process that is specific to DOOM, meaning that if they wanted to try this again on another game, they would need to come up with a different set of reward values for the bot to learn a policy specific to that game.
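A reward function like the one described is straightforward to express in code. The sketch below is hypothetical: the event fields and every weight are invented for illustration and are not the values used in the GameNGen paper. It simply mirrors the structure described above: positive reward for hits, kills, exploration, secrets and new weapons, health, armor and ammo feeding in, and a large penalty for dying.

```python
from dataclasses import dataclass


@dataclass
class StepEvents:
    """What happened during one game tick (hypothetical fields)."""
    enemy_hits: int = 0
    enemy_kills: int = 0
    new_area: bool = False
    secret_area: bool = False
    new_weapon: bool = False
    health_delta: int = 0  # change since last tick; negative means hurt
    armor_delta: int = 0
    ammo_delta: int = 0
    died: bool = False


def reward(e: StepEvents) -> float:
    """Shaped reward for a DOOM-playing agent; all weights are made up."""
    r = 1.0 * e.enemy_hits + 5.0 * e.enemy_kills
    r += 2.0 if e.new_area else 0.0
    r += 5.0 if e.secret_area else 0.0     # bonus for secret areas
    r += 3.0 if e.new_weapon else 0.0
    r += 0.1 * e.health_delta              # healing helps, damage hurts
    r += 0.05 * e.armor_delta
    r += 0.02 * max(e.ammo_delta, 0)       # reward pickups, don't punish firing
    if e.died:
        r -= 20.0
    return r


print(reward(StepEvents(enemy_kills=1, new_area=True)))  # 7.0
```

Swapping DOOM for another game would mean redesigning `StepEvents` and retuning these weights, which is exactly the game-specific effort described above.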

While the bot was playing the game, they stored the rendered frames and the action the bot took on each frame. This collection of game frames, combined with the input actions, is what is then fed into the model to learn from. Unlike Oasis, which as mentioned relies on a transformer, GameNGen runs on a modified version of a pre-trained Stable Diffusion image generation model.
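Turning those recorded play sessions into training data amounts to pairing each frame with the frames and actions that led up to it. A minimal sketch, assuming frames and actions are stored in lockstep (one action per rendered frame) and using a hypothetical `context` length; the strings stand in for rendered screens and the bot's chosen inputs, and `Trajectory` is an illustrative name rather than anything from the paper.

```python
from dataclasses import dataclass, field


@dataclass
class Trajectory:
    """Frames rendered during one play session, and the action taken on each."""
    frames: list = field(default_factory=list)
    actions: list = field(default_factory=list)

    def record(self, frame, action) -> None:
        self.frames.append(frame)
        self.actions.append(action)

    def training_examples(self, context: int):
        """Yield (past frames, past actions, target frame) triples.

        The action taken on frame t-1 is the last input before frame t,
        so the model learns how inputs change what appears next.
        """
        for t in range(context, len(self.frames)):
            yield (self.frames[t - context:t],
                   self.actions[t - context:t],
                   self.frames[t])


inputs = ["forward", "turn_left", "fire"]
trajectory = Trajectory()
for tick in range(6):
    # Stand-ins for the screen the game rendered and the RL bot's choice.
    trajectory.record(f"frame{tick}", inputs[tick % len(inputs)])

examples = list(trajectory.training_examples(context=3))
print(len(examples))                   # 3
print(examples[0][0], examples[0][2])  # ['frame0', 'frame1', 'frame2'] frame3
```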

As we can see throughout the footage, much like Oasis, GameNGen struggles to retain aspects of its own history. The footage is generally sharper and more consistent (again, it's DOOM, a low-resolution game), and the model does a better job of remembering map layouts. But given that the model only has a context memory of three seconds of gameplay, it's still quite easy for it to get 'lost' in its own simulation of the game and invent enemies or items that were not there before.

But what of the cost? Well, this model was tested running on one of Google's fifth-generation TPU chips, which you can't buy, but you can rent them from Google themselves! Meaning that if you wanted to play DOOM in 2025 on an AI model, it would cost you anywhere from $1.20 to $4.20 an hour, depending on the TPU model and where in the world you are.

Again, this is legally ambiguous, given that, as far as I can tell, Microsoft didn't give permission for Google to try and clone their IP with AI models.

Muse (WHAM for Bleeding Edge)

In February of 2025, Microsoft made headlines with the announcement of 'Muse', a collaboration between Microsoft Research and game developers Ninja Theory to build a generative model that can simulate the behaviour of their online shooter Bleeding Edge. While similar to GameNGen and Oasis, the real innovation of the work was that it can simulate how the game might change as a result of making small modifications to the design, and retain those changes in the simulation.

As discussed in the research paper published in Nature, the first phase of Muse was actually interviewing game developers on whether or not generative AI tools are useful when coming up with new ideas. One of the major issues with these tools, as we've already seen in this video, is that generative models are not great at retaining context or history over time, and simply forget the original problem unless you spend a lot of time feeding them the previous history. This isn't surprising, given that generative models are statistical by nature, but it's not practical for a lot of creative ideation, where you keep returning to the same idea and making small iterative changes to it over time to see if they're worthwhile.

So the work in Muse wasn't just about creating a model that replicates Bleeding Edge (that's just the testbed); it was about addressing issues that game designers themselves said prevented generative models from being useful in ideation. As I covered in my breakdown of Muse in my newsletter, Microsoft Research identified three big problems that their generative model needed to address:

The consistency issue I just described.

A problem of diversity: ensuring that when you reach the same location in the simulation, you get varied outputs. This is particularly relevant for a game like Bleeding Edge, where you don't expect people to play the same way each time.

And lastly, persistency: if a designer makes a change, does the model hold onto that change?

This last part is arguably Muse's biggest contribution: the trained model can take a photoshopped image of the game, where perhaps you've put a jump pad, an enemy, or a health pack where one wasn't before, and the model can not only recall that item being in the simulation, but maintain that consistency for sequences up to two minutes long. The underlying AI model is known as a WHAM: a World and Human Action Model, and like Oasis it's built atop the transformer architecture used in large language models. This time it relies on around seven years' worth of Bleeding Edge gameplay data that Ninja Theory could provide.
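One way to think about persistency is as a simple measurement over a generated rollout. The sketch below is a hypothetical, naive version of the idea, not Microsoft Research's evaluation method: it assumes some detector has already labelled which objects appear in each generated frame, and that the edited object should stay in view throughout, then reports the fraction of frames in which the edit survives.

```python
from typing import Iterable


def persistency(rollout: Iterable[set[str]], inserted: str) -> float:
    """Fraction of generated frames in which a designer's edit is still present.

    Each element of `rollout` is the set of objects a hypothetical detector
    found in one generated frame.
    """
    frames = list(rollout)
    if not frames:
        return 0.0
    kept = sum(1 for objects in frames if inserted in objects)
    return kept / len(frames)


# A jump pad photoshopped into the starting frame...
rollout = [
    {"player", "jump_pad"},
    {"player", "jump_pad", "enemy"},
    {"player"},               # ...the model 'forgot' it here
    {"player", "jump_pad"},
]
print(persistency(rollout, "jump_pad"))  # 0.75
```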

It is, on the surface at least, an interesting if not intriguing research project, and one that is equally weird and rather quirky: it doesn't propose to change the games industry anytime soon, or completely change Xbox's approach to game development, but it could have value in the future. But the conversation surrounding it online has been incredibly heated, and as we move towards the end of this piece I want to talk about the broader issue with all of these works: they're discussed in ways that simply don't align with reality.

These Are Not Real Video Games

Having now covered each of these projects in some detail, I return to my opening statement. These are not real games, and at the end of the day, regardless of the hype and noise being made about them, they can't replace games or game engines. I think the footage itself already makes that evident. While impressive, these models struggle to maintain the complexity of the games they have seen over long periods of time, and cannot do it quickly without massive amounts of compute power.

This flies in the face of what game engines do: they provide the tools through which a designer can build the ground truth of their experience, deciding how things work, where things should exist, and how objects interact. The challenge is then maintaining that simulation as games get more complex, by retaining an efficient means to compute that truth. This is why you have occlusion culling in rendering, or level of detail (LOD) applied to textures, animations, and even AI behaviours: the simulation becomes harder to maintain the larger the game becomes, and as players progress through dozens if not hundreds of hours of play.
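As a point of contrast, here is the kind of explicit, designer-controlled trade-off an engine makes. This hypothetical sketch picks a level of detail from distance, covering both which mesh to draw and how often an NPC's AI is updated, and skips objects beyond the last band entirely (distance culling, a simpler cousin of occlusion culling). The bands and tick rates are invented for illustration; real engines tune them per asset and per game.

```python
from typing import NamedTuple, Optional


class Detail(NamedTuple):
    mesh: str            # which model variant to draw
    ai_update_hz: float  # how often to tick this NPC's behaviour


# Hypothetical distance bands (in metres).
LOD_BANDS = [
    (15.0, Detail("high", 30.0)),
    (40.0, Detail("medium", 10.0)),
    (100.0, Detail("low", 2.0)),
]


def choose_detail(distance: float) -> Optional[Detail]:
    """Pick the cheapest representation that still looks right at this range."""
    for max_distance, detail in LOD_BANDS:
        if distance <= max_distance:
            return detail
    return None  # too far away: skip drawing and simulating it this frame


print(choose_detail(25.0))  # Detail(mesh='medium', ai_update_hz=10.0)
```

The key point is that the engine still holds the ground truth: a distant NPC is simulated less often, but it never stops existing, which is exactly the guarantee a next-frame predictor can't offer.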

And clearly this is something these models cannot achieve right now, and to be honest most of them aren't even trying to. Sure, Google proclaims that GameNGen shows diffusion models can be real-time game engines, but as mentioned, Oasis is a sales pitch for AI hardware, and Muse is really about identifying the limitations of generative models so that they can become practical as an ideation tool. These are research projects that are at best really interesting developments in the sophistication of AI models, and at worst hype blown out of proportion by press releases from AI companies who, again, are trying to show they're contenders in the current AI race. But what they're not is actual games, or game engines. And there are three big reasons for this.

The first is the aforementioned consistency issue: they're guessing how the game should play on a frame-by-frame basis based on previous playthroughs. Hence Oasis teleports you to another location like a cubic fever dream, while GameNGen teleports enemies into the DOOM maps, and not in the way that DOOM actually teleports enemies into play; rather, it's just adding enemies because it's statistically likely that an enemy should appear based on the data!

The second is data and hardware. All of these models required vast amounts of training data, with literal years of gameplay often being used, and expensive hardware to train, host or run locally. Considering the human race has spent an inordinate amount of time getting DOOM running on everything from pharmacy signs to ATMs, calculators, pregnancy tests, PDFs and Word documents, pianos and even a Nintendo Alarmo, this is without a doubt the most expensive version of DOOM ever built.

The third and final reason is the stability of the games themselves. Consistency issues aside, any argument made about these replacing game engines ignores one critical fact: games evolve drastically during development. Yet all of these models rely on games that are static and stable. Even Bleeding Edge, the most modern title in this video, has long since ceased development. It was last updated in September 2020, and that makes it a perfect testbed for research because the game isn't changing. If it were, the model would have to adapt every time, meaning even more data and more training to keep up with changes to the meta.

And so, critically, it's important that we all approach these ideas with a pinch of salt and pierce through the hype that is presented. When you have Phil Spencer and Satya Nadella suggesting that Muse is the future of game preservation or game creation, it's worth remembering that the one group of people who didn't say that are the people who actually built it.

Closing

It's safe to say that we're going to see more of these AI simulations of games in the coming years, but what value they bring outside of 'weird AI project that uses games as a testbed' remains to be seen. What is clear is that video games, and the ways in which they're built, are not threatened by these simulations in any meaningful way.

It's equally safe to say generative AI in games is a very hot topic at the moment. As anyone who reads my weekly newsletter will know, it's almost all I'm talking about as I challenge false narratives and try to be a voice of reason about all of this stuff. It's important we cut through the noise and discuss the facts of what these systems do and don't do, and what that really means in the grand scheme of things. Naturally you'll all come to your own conclusions, and by all means get in the comments to discuss, but as ever AI and Games is all about making this information as accessible to you all as possible.