AI and Games is made possible thanks to my paying supporters on Substack as well as crowdfunding over on Patreon.

Support the show to have your name in video credits, contribute to future episode topics, watch content in early access and receive exclusive supporters-only content.

If you'd like to work with us on your own games projects, please check out our consulting services provided at AI and Games. To sponsor our content, please visit our dedicated sponsorship page.

Trying to build fun AI for a game is hard enough. But trying to build a game that teaches you how AI works is no doubt even harder.

Over the years here on AI and Games we've dug into the complexities of many of your favourite titles, and how building enemies, allies, adversaries, and opponents can be a massive undertaking, often with numerous complex systems running in the backend to deliver the experience you've enjoyed for dozens if not hundreds of hours at a time. But the AI in those games is finished. It's done. When the game ships, the AI is finalised and doesn't develop any further - bar fixing the odd bug or two of course.

This is what makes Little Learning Machines so interesting: it's a game not just about training NPCs using contemporary AI techniques for in-game challenges, but one where the training process relies on players learning the fundamentals of how the underlying technology works.

For this episode I sat down to chat with some of the team at developer Transitional Forms about bringing the project to life - just as a huge content update comes to the game on September 5th. We discuss the weird and wonderful challenges they faced along the way, and how the team itself had to learn more about how machine learning actually works, in order to build a game accessible to everyone.

About Little Learning Machines

Released in early access in 2023 and now with a full release in 2024, Little Learning Machines tasks players with solving a variety of increasingly challenging puzzles in a fun and idyllic environment. The challenges include collecting items, navigating hazards, throwing balls, watering flowers, playing with dogs and more.

But in order to solve these puzzles, the player has to teach non-player characters known as Animos how to solve the tasks provided. You train each NPC in real time using the dedicated training 'cloud', where players design their own sample problems and then configure the Animos so they understand how to solve them.

The game itself originates from the studio's previous project, which was actually an interactive film. Agence, released in 2020 and available on Steam, has players work to maintain the ecosystem of a collection of intelligent NPCs. Players can interact with these agents to sow conflict, or try to protect them and ensure their survival, and in turn these characters will react to the player's inputs.

As Agence was close to completion, the team had become enthusiastic not just about understanding more about the reinforcement learning technology that powered its NPC creatures, but also about how they could have much greater control over how the characters behaved. This led to the development of Little Learning Machines, where the team not only wanted to experiment with the idea of training NPCs as a core mechanic, but also to take on the challenge of teaching players how the underlying technology works as they play.

Nick Counter [Art Director, Transitional Forms]:

We would come into the studio in the morning and all get around the computer and see what they had learned overnight. And it was that process of seeing and being excited by new behaviours and different things, like that really inspired what we wanted to do.

We did a lot of work, especially near the end of the [Agence] project, trying to get the training more into anyone's hands [at the studio] so that we could - as much as we're not engineers or anything like that - influence their observations, or at least have some sort of degree of influence over them. And from that we immediately [realised] that is fun - it takes tens of hours at this point, but what if that loop could be closed? That we could make this loop tight enough that it could be experienced right in front of your eyes? That was always kind of the core idea, even before we knew that there were robots or anything like that.

Dante Camarena [Technical Director, Transitional Forms]:

So one of the missing points in this RL space is that there's all this theory behind it, and we often, as users, interact with reinforcement learning algorithms on YouTube or in recommendation algorithms, but what's kind of missing is this thing that undergrad students do when you ask them to run some experiments [on how RL works]. It's just a loop of training something, watching it improve, being like “oh, how can it get better?”, and then moulding that into an experience. We wanted to capture that, and at least our original thought was that [it] could only really be learned through intuition: through doing it multiple times and developing a sense for how these things behave.

It's very close to game design actually, in that it's an iterative process. So trying to create a space where people could experience that, and develop that skill is something that we thought was just kind of absent in games.

Reinforcement Learning as a Mechanic

So yeah, Little Learning Machines is a game all about training AI to solve problems, but I'd be remiss if I didn't point out that other games have explored this before. Creatures from 1996 and Black & White from 2001 are famous for having NPCs that players could train and configure over time. But it's not an idea you've seen applied to games all that often. Both the Norns of Creatures and the monsters of Black & White are powered by artificial neural networks, which are slowly tweaked and modified over time to better suit the goals of the player. But in each case the design is more freeform, allowing you to explore lots of different ideas and manipulate these characters at your own pace. For Little Learning Machines it's quite different: the Animos need to be able to solve a puzzle in front of you, and they need to do it quickly.

Little Learning Machines uses the same core architecture: the Animos' brains operate using neural networks, but they're trained using a contemporary reinforcement learning algorithm. For those unfamiliar with the concept: reinforcement learning is a process whereby an agent learns to solve a problem through repetition, receiving both positive and negative signals as to how well it is performing. The problem is broken up into states, the unique situations that can arise within the problem, and in each state the agent applies actions. After each action, it receives either a positive or negative signal. These signals, often referred to as rewards, help the agent understand whether it's doing something correctly or incorrectly. The more it experiments and tries different strategies, the better it learns which actions are most effective in any given state.
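To make that state, action and reward loop concrete, here is a minimal sketch in Python. It is not what the game does: the Animos use neural networks trained with PPO, whereas this swaps in tabular Q-learning, a simpler textbook algorithm, on a hypothetical five-cell corridor where reaching the last cell earns +1 and every other step costs a small penalty. The names (`step`, `train`, `N_STATES`) and all values are illustrative assumptions.

```python
import random

# Hypothetical toy problem: a corridor of cells 0..4; the agent starts at cell 0.
N_STATES = 5
GOAL = 4
ACTIONS = (-1, +1)  # index 0 = move left, index 1 = move right

def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == GOAL:
        return next_state, 1.0, True    # positive signal: reached the goal
    return next_state, -0.01, False     # small negative signal for every other step

def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning: q[s][a] estimates the long-term reward of action a in state s."""
    rng = random.Random(seed)
    q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Mostly exploit the best-known action, occasionally explore at random.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # Move the estimate towards the reward plus the discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q

q = train()
policy = ["left" if q[s][0] > q[s][1] else "right" for s in range(GOAL)]
print(policy)  # ['right', 'right', 'right', 'right']
```

Because every wasted step costs a little and the goal pays out, the learned values rise towards the goal, and the greedy policy ends up moving right from every cell.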

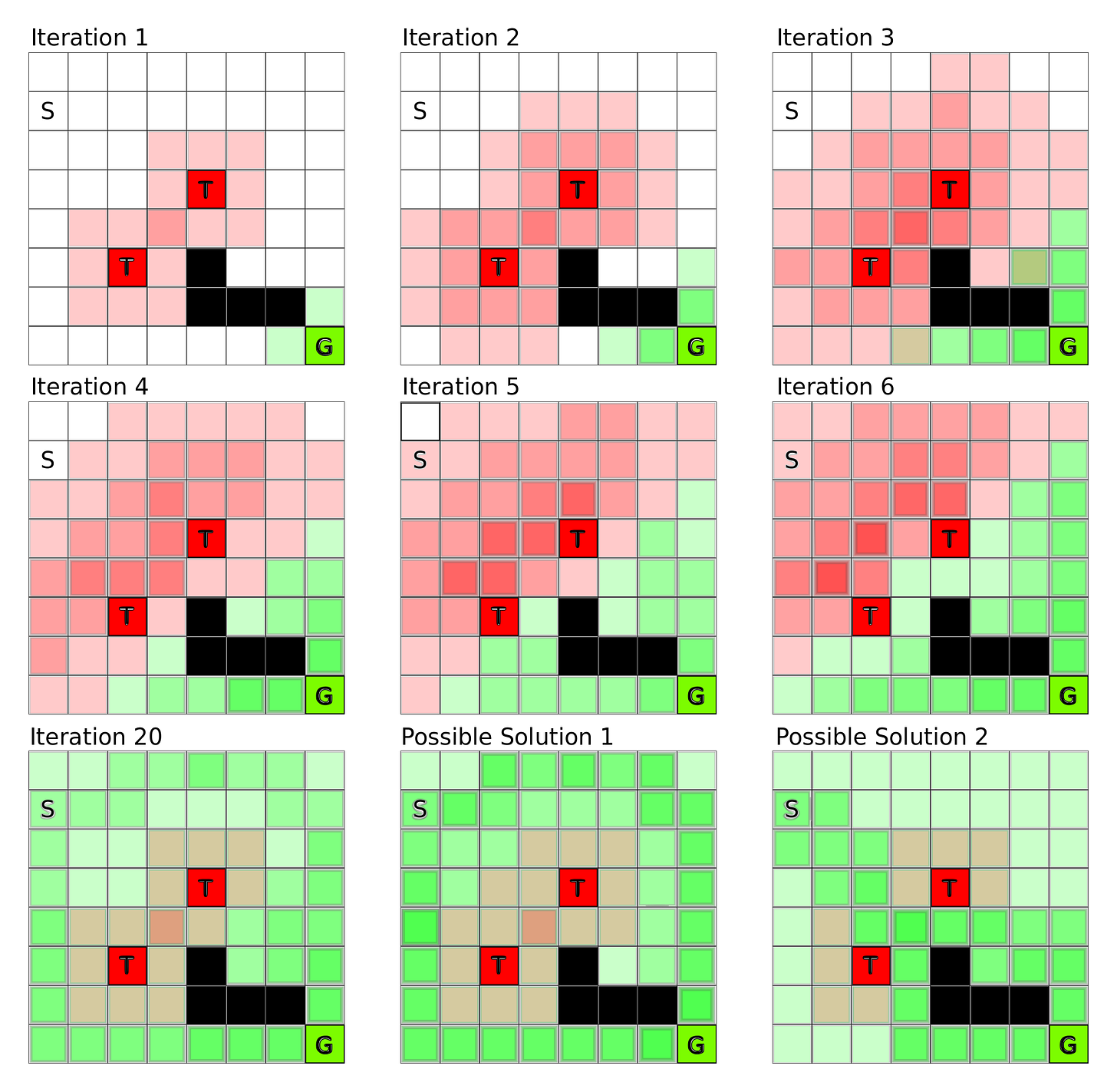

In Little Learning Machines, players are presented with a collection of puzzles and a UI in the cloud that allows them to configure the learning process. For those of us who know a thing or two about machine learning, it will all look rather familiar. The puzzles players face share similar properties with many of the formative examples anyone who has read a textbook on reinforcement learning will have encountered, complete with a discrete grid structure, objects of relevance, and goals that are to be satisfied. But in those textbook examples the goals are already defined and the rewards already identified, given the focus is on teaching you how the underlying mathematics of the algorithms works. Little Learning Machines takes this concept and turns it into a puzzle.
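One way to picture the difference: in a textbook grid world the reward table is fixed by the author, while in the game the player effectively writes it. The sketch below assumes a hypothetical setup in which the simulation reports named events each step and a player-authored table maps those events to rewards. The event names, values and `RewardConfig` class are invented for illustration and are not the game's actual configuration format.

```python
from dataclasses import dataclass, field

@dataclass
class RewardConfig:
    """Hypothetical player-authored reward table: event name -> reward value."""
    rules: dict = field(default_factory=dict)

    def reward_for(self, events):
        # Sum the rewards for every event raised this step; unlisted events are worth 0.
        return sum(self.rules.get(event, 0.0) for event in events)

# A player setting up a "water the flowers" exercise might reward the goal,
# give a small bonus for a useful intermediate step, and penalise hazards.
config = RewardConfig(rules={
    "watered_flower": 1.0,
    "picked_up_watering_can": 0.25,
    "entered_hazard": -1.0,
})

print(config.reward_for(["picked_up_watering_can", "watered_flower"]))  # 1.25
print(config.reward_for(["entered_hazard"]))  # -1.0
print(config.reward_for(["walked"]))  # 0.0
```

Choosing these values is where the puzzle lies: reward only the final goal and the agent may rarely stumble onto it, but reward an intermediate step too generously and it may learn to repeat that step instead of finishing the task.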

For me, the most interesting aspect of the game is that players need to be able to train an Animo relatively quickly, within 30 seconds or a minute or two. This creates a big technical challenge in ensuring the underlying AI infrastructure is up to the task, alongside the design challenge of making this process fun without it becoming too frustrating.

Dante Camarena:

We came up with a metric that is non-standard for - I mean, maybe I haven't read enough papers *laughs* - but there's a metric that we used, which is ‘Time to First Intelligence’. Which is really just how long you can ask the player to wait before they see something cool happening - which at the beginning, when we started working on this, was like 5 minutes *laughs*. Which was a lot! But later on we were able to get it down to like 20 seconds, which was closer to where players were like ‘oh, it's doing a thing!’. Before, they kind of thought that they were doing something wrong. So that was one of the metrics, but then on the other hand, when you're like ‘oh uh… how well can it do anything that you set it to do?’, that's the other metric, which is ‘Maximum Intelligence’.

So the entire point of this game, or the entire balancing of the hyperparameters [of the RL algorithm], is to find a good balance between ‘Time to First Intelligence’ - so you want the agent to learn quickly - and how much it can learn overall (i.e. ‘Maximum Intelligence’).
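Both metrics can be read off a training curve. The sketch below assumes one possible way of measuring them, which is ours rather than the studio's: ‘Time to First Intelligence’ as the first wall-clock time at which the mean episode reward crosses a threshold, and ‘Maximum Intelligence’ as the best mean reward reached. The two curves are made-up numbers for two hypothetical hyperparameter settings, not measurements from the game.

```python
def time_to_first_intelligence(curve, threshold):
    """First time (seconds) at which mean episode reward reaches threshold, or None."""
    for seconds, mean_reward in curve:
        if mean_reward >= threshold:
            return seconds
    return None

def maximum_intelligence(curve):
    """Best mean episode reward reached anywhere on the curve."""
    return max(mean_reward for _, mean_reward in curve)

# Illustrative (seconds, mean episode reward) samples for two hypothetical settings.
fast_but_shallow = [(0, -1.0), (10, -0.2), (20, 0.3), (60, 0.5), (120, 0.55)]
slow_but_deep = [(0, -1.0), (60, -0.5), (180, 0.1), (300, 0.7), (600, 0.9)]

for name, curve in [("fast_but_shallow", fast_but_shallow), ("slow_but_deep", slow_but_deep)]:
    print(name, time_to_first_intelligence(curve, threshold=0.0), maximum_intelligence(curve))
```

Here the first setting shows something happening after 20 seconds but plateaus at 0.55, while the second takes 180 seconds to get going but reaches 0.9. Tuning for the game means finding a point between the two.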

Training Animos

The AI pipeline hidden within Little Learning Machines is certainly one that most developers won't have put into their games before, given that most games adopting machine learning for in-game behaviour use pre-trained models: they're trained during development of the game, or in some cases outside of the game entirely. For example, back in episode 60 of AI and Games we looked at the Drivatar AI in the Forza series. The Drivatars learn from you as you play the game, but while the earlier games trained the Drivatars on your Xbox, the more contemporary versions are trained outside the game on an Azure server. In fact, if you pay attention before a race starts, you can see the trained models being downloaded onto your device.

So for Little Learning Machines to work, you need more than just the code to run a trained network - what we would typically call 'inferencing' - you also need an entire learning environment running underneath the game as you play it. The actual training system relies on the Unity ML-Agents toolkit: a library first released in 2017 that provides users with the ability to train AI agents using reinforcement learning in the Unity engine. The game uses the PPO (Proximal Policy Optimisation) algorithm to train the Animos: once you configure the environment, the actual training runs in an external Python process, given it relies on many contemporary machine learning libraries and tools available in that language.
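For readers curious about what PPO actually optimises, the core of the algorithm is a clipped surrogate objective. It compares how likely an action is under the updated policy versus the policy that collected the data, and caps that ratio so a single update cannot move the policy too far. The sketch below computes only that term in plain Python, following the standard formulation from the PPO paper. A full trainer such as ML-Agents' also includes a value-function loss and an entropy bonus, works on PyTorch tensors, and estimates the advantages itself. The function name and the sample numbers are illustrative.

```python
import math

def ppo_clip_objective(new_log_probs, old_log_probs, advantages, epsilon=0.2):
    """Mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A), where r = pi_new(a|s) / pi_old(a|s).

    PPO maximises this value; a trainer would minimise its negative.
    """
    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        ratio = math.exp(new_lp - old_lp)  # probability ratio recovered from log-probabilities
        clipped_ratio = min(max(ratio, 1.0 - epsilon), 1.0 + epsilon)
        # Take the pessimistic (smaller) of the unclipped and clipped terms.
        total += min(ratio * adv, clipped_ratio * adv)
    return total / len(advantages)

# Two sampled actions: the first had a good outcome and its probability rose 0.4 -> 0.6,
# the second had a bad outcome and its probability fell 0.4 -> 0.2.
value = ppo_clip_objective(
    new_log_probs=[math.log(0.6), math.log(0.2)],
    old_log_probs=[math.log(0.4), math.log(0.4)],
    advantages=[1.0, -1.0],
)
print(round(value, 6))  # 0.2
```

The first action's ratio of 1.5 is capped at 1.2 (1 + epsilon), and for the second the objective takes the more pessimistic clipped term of -0.8, which is why the result is 0.2 rather than the unclipped 0.5.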

But while Unity's ML-Agents works pretty well, it's not built for use in real time in a game. Rather, it's designed for use during development. As such, the dev team had to reproduce much of the underlying guts of the ML-Agents toolkit so that it works within the game while you run it as a playable executable. In fact, eagle-eyed players will have noticed that the first time you start the game, it spends a couple of minutes installing PyTorch and setting up the virtual environment it needs to train the Animos as part of core gameplay.

Dante Camarena:

So at some point there were a lot of decisions to make, and it always came down to using something that already exists or trying to make it ourselves. And at the same time we needed to prove that the concept works. So the first thing we did was use ML-Agents straight up, using the standard pipeline, and that worked. It took too long - it took like 30/40 minutes to train - and it required using the Unity editor. So the game only really ran inside the ML-Agents workflow, which is a standard agents workflow, but it proved that it was possible. Then we had the choice of whether we rewrite it from scratch - it's like, no, we're on a deadline here - so let's move some of this around, and then okay, well, now we don't have to do it in the editor, we can do it in this standalone version.

Oh but now the game design is kind of questionable. We need to iterate on the game design and we have all this like logic that we have to write. Let's just write it using C#, and so we wrote the grid system and then items like trees and you can plant them, and there's fire and the fire spreads on the trees, and like there's a lot of gameplay mechanics. [We were aiming for] expressivity; trying to do complex like mechanics so that the world didn't just feel like a toy.

So trying to get that done in time kind of led us to have all this logic in C#, but then we had this other concern, which is that now it's taking too long to train, and that was kind of the bottleneck of it all. That's when we built this system to transition the logic: still doing it in C#, but not *inside* Unity, to cut all of that overhead.

If you don't mind me telling a bit of a personal story here: one developer we were working with, his name is Anuj Patel, was a very iterative kind of developer. He liked making games and he really believed in this project. He joined around the middle of the project, and his work was taking all this logic, refactoring it and stripping out all the Unity dependencies. We needed to write tests for it, we needed to make it into this DLL, and he said that this was excruciating and boring because it didn't feel like actual game design. It didn't feel iterative or interactive, and it was kind of annoying for him. But he did it, and he managed to get it all done by the time that we had to get this [Steam] Next Fest demo out, and then he went on vacation.

While he was on vacation we managed to finish the integration on the Python side, and thanks to all the work he did we were able to combine those two things, and that's where we got our amazing 10x speed-up. The unfortunate thing is that while he was on vacation, he passed away. So he never got to see the results of months of gruelling work to get these two things connected.

With all this working, one big issue still remains, and that's the hyperparameters: the specific training settings used by the algorithm itself. While players configure each Animo's learning problem in the cloud, they all run the same PPO algorithm under the hood, using the same hyperparameters.

Little Learning Machines' biggest selling point is the idea that you can complete this training in real time, so much of the trial and error during development went into ensuring the hyperparameters allowed agents to learn quickly, but without the risk of them never learning anything useful, or of 'overfitting', whereby they can only solve the problem you've given them in the cloud and their knowledge doesn't transfer to the actual in-game puzzles.
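Overfitting is easiest to see with a toy. The sketch below assumes a hypothetical one-dimensional level described by a start cell and a goal cell, and compares two hand-written policies rather than trained ones: one that simply replays the moves that solved the training layout, and one that actually uses its observation of where the goal is. Both score perfectly on the training layout; only held-out layouts reveal the difference. Every name here is illustrative.

```python
def run(policy, start, goal, max_steps=10):
    """Return True if the policy reaches the goal cell within max_steps moves."""
    pos = start
    for t in range(max_steps):
        if pos == goal:
            return True
        pos += policy(pos, goal, t)
    return pos == goal

def memorised(pos, goal, t):
    # Ignores its observations and replays the three right-moves that solved the training layout.
    return 1 if t < 3 else 0

def general(pos, goal, t):
    # Uses its observation of the goal: step one cell towards it (or stay if already there).
    return (goal > pos) - (goal < pos)

def success_rate(policy, levels):
    return sum(run(policy, start, goal) for start, goal in levels) / len(levels)

train_levels = [(0, 3)]
heldout_levels = [(0, 5), (4, 1), (2, 3)]

for name, policy in [("memorised", memorised), ("general", general)]:
    print(name, success_rate(policy, train_levels), success_rate(policy, heldout_levels))
```

The memorised policy scores 1.0 on its training layout but solves only one of the three held-out layouts, and only by luck, while the general policy solves all of them. The equivalent check in the game is whether an Animo trained in the cloud still succeeds once it is dropped into the real quest.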

Nick Counter:

So each of the Animos has fairly consistent hyperparameters - some are slightly different because some of them have different observations. But there was a moment, like two months before we shipped the game, where we designed a hyperparameter panel and we were like ‘oh, we could just ship with this’, but then we realised this was too much.
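One plausible way to structure ‘fairly consistent’ hyperparameters with a few per-Animo differences is a single shared settings object plus small overrides. This is a hypothetical sketch: the field names are loosely modelled on ML-Agents' PPO configuration keys, the values are placeholders rather than the game's actual settings, and the Animo names are invented.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class PPOSettings:
    # Placeholder values for illustration only, not Little Learning Machines' settings.
    learning_rate: float = 3e-4
    epsilon: float = 0.2       # PPO clipping range
    lambd: float = 0.95        # GAE lambda
    num_epoch: int = 3
    batch_size: int = 1024
    buffer_size: int = 10240
    hidden_units: int = 128    # width of each hidden layer

SHARED = PPOSettings()

# Hypothetical override: an Animo with more observations gets a wider network.
ANIMO_OVERRIDES = {
    "animo_with_extra_sensors": {"hidden_units": 256},
}

def settings_for(animo_name):
    """Shared settings, with any per-Animo overrides applied on top."""
    return replace(SHARED, **ANIMO_OVERRIDES.get(animo_name, {}))

print(settings_for("animo_with_extra_sensors").hidden_units)  # 256
print(settings_for("basic_animo") == SHARED)  # True
```

Keeping the shared object frozen and exposing only a handful of overrides reflects the trade-off Nick describes: a full hyperparameter panel was possible, but pre-tuned settings kept the experience manageable.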

Designing Puzzles for Learning

Pietro Gagliano [Co-Founder, Transitional Forms]:

There's not only creative space for the player but creative space for the agents themselves, the machines. Sometimes you'll train one of the machines for a particular task and then you're seeing them apply that skill set to another problem.

There’s creative space for the little creatures and that's part of the fun of the game; watching them figure things out, and the surprise and delight that comes from problem solving from something that you never really planned for.

And so with the technical architecture in place, the next big challenge was in building puzzles for the game. But this is a challenge that emerges in multiple forms, both in terms of trying to come up with puzzles that will encourage players to try different training configurations for the Animos, but also in ensuring that players are actually understanding what's happening under the hood and can adapt to suit.

Tommy Thompson (y’know, me):

I think the dogs are actually a really nice touch, because you're like ‘oh cool, I'll go and pet a dog… oh no, the dog's moving now’, and of course, critically, you made petting a dog a key challenge of the game. Because the internet demands that if there's a dog in your game you've got to pet it, right? So what was the process like of putting all the puzzles together? From this conversation it sounds like everyone chipped in somewhere and had something to contribute to this whole process.

Nick Counter:

Iteration is the key. We spent a lot of time working on this project, and at the beginning - like Dante mentioned - we didn't necessarily know how smart they would be. So we couldn't necessarily design - like, we had ideas of how smart they could be, so we could have ideas of the difficulty of the quests, the kind of tools they could use.

Once we proved that they can pick up an item and use that item on something else, and navigate a space, and then even proved that they can choose between multiple items in the right context - [once we got to] that point, then we could start thinking about what kind of items we want to include. And then the hardest part, which we were iterating on up until the end, was the order in which we introduce players to basic ML concepts. The goal was always: if we can get players up to my level of understanding - cause I’m an amateur *laughs*, I'm not an engineer - if we could get people to understand these things to the point where I do, then that would be kind of a success.

Seeing the Animos do different kinds of tasks is also rewarding in different ways. Seeing an Animo water a flower is one thing, seeing one destroy something, seeing it manipulate its environment is very satisfying. We have an item, the ‘blockmaker’, which it can use to make bridges - which inherently is one of the most satisfying things to train. You literally see it making decisions and manipulating its environment, which for some reason seems a bit more intelligent than other behaviours, even though it's kind of doing the exact same kind of thing.

So it was an iterative process of when do we introduce these items, and how complex are these items. Later in the game like all the final quests are multi-Animo quests where you get them to collaborate. It's very hard, so we iterated all the way up till the end to get that working well. But then going all the way back to the beginning, like we were always around a whiteboard brainstorming what kind of items could we use? What could these items be used for? So we're all part of that process.

One final thing is that we have a really talented artist, Reese, and he designed all these really cool styles for levels, so it was also baked into when we want to reveal specific environments, and what kind of item we want to use that's accurate to each environment. Or will the environment kind of help you understand what is supposed to be done here?

Dante Camarena:

And also like Fiona was like ‘Can we have pumpkins for Halloween? Can we have like Snowmen for Christmas?’, and it's just like okay *laughs*.

Fiona Firby [Community Manager]:

I love a good theme pack, so I'll never shy away from a good holiday theme!

Tommy:

You got to plan that in there, right? You got to make sure you got it in there in advance. *laughs*

Fiona Firby:

Transitional Forms, especially going into this game, had this kind of empathy for the machines to some extent. That was a really huge goal for this: to somehow ensure players empathize. But in order to empathize I think you need to learn about something, and the more insight you have into the process that they’re going through, or the different actions they're taking in the black box to some extent, the more understanding you can have, and potentially the more empathy you can have for these creatures that you're going to literally throw in the garbage as soon as they don't do what you tell them!

Nick Counter:

When it comes to all those different things, a big thing that we included in the end was the reward pop-up: for a long period of production, when they received a reward in the cloud, you didn't see the reward pop out of their head when they took the proper action. It was just the smallest thing, but when we finally got it in, it was like ‘oh, now people can see that this reward is tied to this action they took’. That in itself seems like the simplest thing to us, to some extent, but without it people were completely lost.
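The underlying idea is simple to express: attach each reward to the action that earned it, at the moment it happens, rather than only reporting a total at the end of an episode. A hypothetical sketch of that bookkeeping follows. The `RewardPopup` type and the action names are invented, and printing stands in for the visual pop-up the game draws above the Animo.

```python
from dataclasses import dataclass

@dataclass
class RewardPopup:
    step: int
    action: str
    reward: float

def reward_popups(trajectory):
    """Pair each non-zero reward with the step and action that produced it."""
    return [
        RewardPopup(step, action, reward)
        for step, (action, reward) in enumerate(trajectory)
        if reward != 0
    ]

# One hypothetical training episode as (action taken, reward received) pairs.
trajectory = [
    ("move_left", 0.0),
    ("pick_up_watering_can", 0.0),
    ("water_flower", 1.0),
    ("walk_into_fire", -1.0),
]

for popup in reward_popups(trajectory):
    # In the game this would be a pop-up above the Animo; here we just print it.
    print(f"step {popup.step}: {popup.reward:+} for {popup.action}")
```

This prints `step 2: +1.0 for water_flower` and `step 3: -1.0 for walk_into_fire`, so each signal is visibly tied to the action behind it.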

Fiona Firby:

It's no secret that there's a large barrier to entry to training an AI agent if you are not familiar with what is going on, and what all this crazy technology is. I don't come from a technical AI training background, so when I joined the company and found out about Little Learning Machines and how it was working, I was like ‘oh my goodness, this is crazy’, and... it's crazy that I can explain this to people after playing this game, *still* without having formal education and training.

I think there's a real beauty in the fact that you can turn on this game and just play it for the cute purposes: play it because you want these machines to succeed, and you want them to grow, and you want to see what happens, and you want to explore the world. But at the same time, afterwards, when you're explaining to someone how [you] got a machine to learn how to cut down a tree, you're quite literally explaining the process of training those agents.

One of the things that we did, because there's such an educational component to this game, was make a learning companion, which is a lesson PDF that can be provided to educators to help integrate classroom learning with Little Learning Machines. Because again, the barrier to entry is high, and it's scary if you're just looking at this wall of code. So having this kind of cute, cozy, friendly, approachable system just helps get the familiarity out there.

Like these systems, these processes, they're not going anywhere. They kind of are the future. So getting more people exposed to it is so important, and in a way that shows you empathy, and makes you emotionally invest in the characters, I think is just super cool.

Closing

Little Learning Machines is certainly something unique in the gaming space: it's not often you see a game that not only applies AI in a unique way that - certainly from my perspective - is fun to tinker and play with, but also earnestly seeks to teach its players how the underlying technology works.

This episode is, of course, expertly timed, as Little Learning Machines is receiving a big content update on September 5th, with a stack of new islands to visit and quests to complete (18 of them, in fact). Critically, this update doesn't just revisit existing mechanics with new twists; it also provides new routes of progression that help make the learning curve for training your Animos a little smoother. Plus there are six new costumes as well, and it's always nice to have some fresh cosmetics. It's a huge expansion to the game at no extra cost, so go check it out over on Steam.

In the meantime thanks for checking out this episode of AI and Games all about Little Learning Machines, and a special thanks to Dante, Fiona, Nick, and Pietro from Transitional Forms for sitting down to chat with me about their experiences in building the game. Plus we have a dedicated episode of the Branching Factor podcast where Dante, Fiona and Nick chat with me some more about their experiences in a slightly less formal setting.

Additional Links

Little Learning Machines is available right now on Steam: https://store.steampowered.com/app/1993710/Little_Learning_Machines/

Find out more about Little Learning Machines via the website:

https://www.transforms.ai/little-learning-machines

Learning Companion PDF:

https://www.transforms.ai/learning

GDC Postmortem Talk:

https://gdcvault.com/play/1034597/AI-Summit-Little-Learning-Machines