Is the AI Winter Coming for Games? | Weekly Newsletter 05/06/24

Not quite, but let's unpack this some more.

The AI and Games Newsletter brings concise and informative discussion on artificial intelligence for video games each and every week, plus a summary of all of our content released across various channels, from YouTube videos to episodes of our podcast ‘Branching Factor’ and in-person events.

You can subscribe and support AI and Games right here on Substack, with weekly editions appearing in your inbox. The newsletter is also shared with our audience on LinkedIn.

Hello all, and welcome to this week’s AI and Games newsletter. This week, I wanted to unpack a point that was raised in my recent interview on Eurogamer, but first, let’s get into announcements!

Announcements

Some quick announcements on all things AI and Games:

Volume 2 of the Game AI Uncovered book series just launched yesterday on June 4th. It’s now available at all major book retailers. Plus you can grab it at the official Routledge publisher page. Use the code DIS20 for 20% off!

Tommy is going to be on the road for the next couple of weeks, first hosting a panel and delivering a talk at the AI and Games Summer School (late registration still open). Then you can catch him at Develop:Brighton (early bird registration closes today) with his hype-free overview of generative AI for video games.

The recently announced Goal State series from AI and Games is now seeking sponsors to help bring this project to our audience. If you and your team are interested in supporting Goal State, and bringing your brand to the AI and Games audience, then message Tommy directly for more information.

Unpacking the AI ‘Winter’

Last week I was happy to see a new article on popular gaming site Eurogamer, in which I appeared throughout courtesy of an interview that the author Ed Nightingale conducted with me after my talk at the London Developer Conference.

The article was really good. It seemed well received by those who read it, and I feel it did a great job of representing my thoughts on AI for games, particularly around the current conversations surrounding generative AI.

However, once it was out there, I was surprised to see that a recurring talking point across social media was my comment about the ‘AI winter’. This is a term that - certainly among AI circles - is reasonably well known, but I was intrigued to see how people reacted to the notion, and it led to some discussion over whether winter is coming (ugh) for AI in the current market.

So let’s unpack what this means, why I brought it up in the interview, and whether I think we need to dust off the snow gloves and big puffy jackets.

What is an AI Winter?

The term ‘AI Winter’ is often used to describe a period of disillusionment that emerges among businesses and other stakeholders due to the limitations of artificial intelligence technology at the time. While no doubt many in the AI community will argue about the start and end points of each instance, it’s largely agreed that we have had two ‘big’ AI Winters already, with some smaller ones in between.

The term itself dates back to the 1980s, and the first documented use is credited to Drew McDermott during the opening remarks of a panel discussion at the AAAI (American Association for Artificial Intelligence) conference in 1984:

Drew McDermott: In spite of all the commercial hustle and bustle around AI these days, there’s a mood that I’m sure many of you are familiar with of deep unease among AI researchers who have been around more than the last four years or so. This unease is due to the worry that perhaps expectations about AI are too high, and that this will eventually result in disaster.

To sketch a worst case scenario, suppose that five years from now the strategic computing initiative collapses miserably as autonomous vehicles fail to roll. The fifth generation turns out not to go anywhere, and the Japanese government immediately gets out of computing. Every startup company fails. Texas Instruments and Schlumberger and all other companies lose interest. And there’s a big backlash so that you can’t get money for anything connected with AI. Everybody hurriedly changes the names of their research projects to something else. This condition, called the “AI Winter” by some, prompted someone to ask me if “nuclear winter” were the situation where funding is cut off for nuclear weapons. So that’s the worst case scenario.

I don’t think this scenario is very likely to happen, nor even a milder version of it. But there is nervousness, and I think it is important that we take steps to make sure the “AI Winter” doesn’t happen - by disciplining ourselves and educating the public.

The Dark Ages of AI: A Panel Discussion at AAAI-84

McDermott, Waldrop, Schank, Chandrasekaran, and McDermott, AI Magazine 6(3), 1985

Now, ironically, McDermott stated he didn’t think even a ‘milder’ version of this scenario was likely, only for one to come to pass mere years later. The first AI winter is largely acknowledged to have occurred between 1974 and 1980, before McDermott’s remarks, while the second, the one he was warning about, is considered to have run from 1987 to 2000. In fact, when I began my journey in grad school learning about the broad spectrum of AI methodologies in 2004, we were only just shaking off the snow from our winter gear, as machine learning was still considered largely unreliable in the myriad problem sectors where we now take it for granted. What a difference a decade or two can make.


Why Do AI Winters Happen?

So you might be thinking: who is becoming so disillusioned that an AI winter even occurs? While disillusionment may spread into many corners of the space, from the engineers trying to adopt AI in practical scenarios to the researchers trying to explore new territory, the true catalyst of any AI winter is whoever is holding the purse strings.

First, it’s important to recognise that historically the bulk of artificial intelligence development was conducted by universities, which to this day rely heavily on research grant funding. These grants are often financed by governments and international political bodies such as the European Union. But computer science, and in turn AI, have also been intrinsically linked to the military industrial complex since their inception (DARPA has historically been one of the largest investors in AI research in the US). Both of the previous AI winters were triggered in part by disillusionment with the efficacy of the AI systems of their era, which ultimately reduced the amount of money government funding bodies spent on specific sub-disciplines. In fact, given McDermott’s remark about researchers hurriedly renaming their projects, I’d argue this is what led to an increasing number of sub-disciplines emerging. Notable among them is the growing adoption of the term ‘machine learning’ in the 1990s and early 2000s to differentiate a suite of algorithms and approaches from the more traditional symbolic, rule-driven logic that dominated AI in the 1970s and 1980s - and that still largely dominates when we think of AI in video games today.

The second facet to this is large corporations that invest heavily in the current state of the art, only for its practical limitations and cost-effectiveness to come into question. This was a huge factor in the second AI winter, as many companies that had invested in ‘Expert Systems’ (the big AI fad of the 80s) subsequently went bust. Meanwhile, many large players in the tech sector that had shown interest, notably Xerox and Texas Instruments (yeah, the calculator people), walked away - a scenario McDermott had deemed unlikely a mere three years prior.

The Third AI Winter?

It’s funny how easily one can take McDermott’s opening statement in 1984 and transpose it onto the current state of artificial intelligence: systems failing to live up to the hype that is driving investment, and a backlash against that investment as those systems fail to deliver what was promised. I can certainly see a reality where all of these aspects come to pass. But in truth, I sincerely doubt a third AI winter is upon us; rather, what lies ahead is… a cooling off period. An ‘AI Autumn’ perhaps?

It’s safe to say AI is in a boom phase right now. In fact, in some circles the past decade has been described as an ‘AI Spring’, given we’ve come out of a protracted winter that lasted the best part of 20 years. Looking back now to when I started grad school in the mid-2000s, I can totally see the rationale behind this. The introduction of Deep Learning in the early 2010s drastically changed the face of the industry. Significant gains have been made in numerous problem sectors, gains that seemed fanciful when I was in grad school in the late 2000s. This is especially striking when you consider that progress in areas such as text, image, and voice recognition, speech synthesis, and complex, long-horizon problem environments such as the game of Go had been very slow across the 90s and 2000s. The idea that these problems, as they were originally conceived, were largely solved in less than a decade is an astronomical step forward for the field of AI.

We’ve seen this particularly in games, and it’s a point I’ve raised numerous times in the past year or so, be it in my recent London Developer Conference talk or in my AI 101 content on Machine Learning for Games and on the ways in which AI is adopted in game development in 2024. The impact Deep Learning has had on game development, most notably in AAA, has been massive. But I often refer to this as a ‘silent revolution’, because the vast majority of players (and even people who work across the industry) had no idea it was happening. As such, the conversation around how powerful, how practical, and how effective AI has been for games has remained an internal discussion among industry practitioners. This has led to a big problem: we now have people outside of the industry dictating the narrative on AI for games, and it’s not only inaccurate, it’s pissing people off.

The subsequent boom of Generative AI has only added to the excitement, and frustration, surrounding AI developments, as the likes of OpenAI, Google, Microsoft and more make all sorts of proclamations about the future of numerous creative sectors courtesy of generative AI technologies. It’s clear there’s no winter coming, as investment in AI continues to be strong across the board.

Despite this, I think the issue that really needs to be tackled is that this is perhaps the first time the capabilities of modern AI technology are being discussed so broadly outside of the field. There is an AI Winter of sorts on the horizon, but not one we’ve had to tackle before. For the true arbiters of AI disillusionment are no longer the researchers, the investors, and the corporations: it’s the general public.

The Court of Public Opinion

It’s safe to say that AI has an image problem right now. For all of the genuine innovation and progress we’re seeing in generative AI in particular, the ethical practices and applications of the technology have rightfully earned scorn and mistrust from some corners of society. Now, this is a big problem, and one I plan to discuss in a future issue. But right now, for me, this is perhaps the linchpin upon which a reaction will begin to manifest.

Let me stress again, there is no AI winter coming. The genie is out of the bottle, and generative AI has become so widespread so quickly, with numerous ways we can apply it in our day-to-day lives, that it’s nigh impossible to stuff it back in there.

But this raises the real problem: the genie’s actually a bit shit at his job.

Now, this doesn’t surprise me. Generative AI such as GPT, Stable Diffusion, or other large-scale systems derived from masses of (no doubt legally infringing) datasets is going to be a great tool, but an unreliable one. When consulting with studios, I like to suggest to clients and business partners that they should think of these systems not as Generative AI, but rather as Derivative AI. It’s immensely useful in a lot of workflows - given it can speed up the production of monotonous material nobody wants to read, much less write - but it’s really not safe for public consumption. It lies, it makes mistakes, and it cannot replace a human in any meaningful production process.

I wouldn’t trust generative AI to write this newsletter, or any of my work on YouTube and the like, given it’s fundamentally lacking in so many crucial areas. In fact I’ve tried this myself in previous years only to find the resulting outcomes to be just, well, terrible. Though I will say it’s high time I come back and try it again with a 2024 language model.

And I think this is part of the backlash that is emerging. Not just that it’s having an impact on people’s livelihoods, but also that it’s fundamentally not good enough for 90% of the things companies seek to use it for. It’s capitalism at its finest: everyone jumps on the AI bandwagon, only they failed to understand (or no doubt just ignored) the limitations of these systems in their current form, because most investors aren’t going to be thinking about that; they want to see the line go up! As a result, we’ve had everything from Air Canada’s AI chatbot lying about its company policies, and New York City’s official chatbot, designed to help small businesses, encouraging users to break the law, to MSN’s news aggregator AI calling former NBA player Brandon Hunter “useless” after his sudden and tragic death. And of course, as a Glaswegian expat, how can I not mention the ‘Willy Wonka Experience’?

Don’t get me wrong, the advancements in generative AI are fantastic, but it’s the application that is the problem. For every considered, carefully produced tool or system designed to solve a very specific problem, we have (imperial and metric) tonnes of slop being pushed all across the internet. We have systems that would clearly fail most risk assessments for business applications being rushed out to disastrous results. Oh, and don’t forget people are losing their jobs as a result. No wonder people are pissed off.

Then, of course, stop and think about how this is intersecting with games.

Stop Me If You’ve Heard This Before

We’re entering a new era of technology that’s going to change the type of games we make, influence the type of games we play, and ultimately lead to a new era of creator empowerment and ownership.

So hang on, am I talking about generative AI? Or NFTs?

As the conversation surrounding generative AI grows increasingly toxic, all while companies refrain from truly embracing it in their games, it’s creating a simmering pot. The image problem for AI is bad right now, but I’d argue it’s even worse in games. The gaming populace already pushed back against NFTs - the ultimate manifestation of the aggressive monetisation practices they’ve been subjected to for over a decade now - and given how little worthwhile generative AI we’re seeing in games, I can totally see why they’re pushing back against AI as well, even if a lot of that pushback is rather misguided.

This whole point of gamers and the AI image problem is, as stated, one I wish to return to in the future. But I think it speaks to the issue at hand. Whether in games or elsewhere in consumer products, a lot of half-baked, ill-conceived generative AI is simply contributing to the enshittification of the products companies have invested in. It’s not the same pattern as before, but it’s markedly similar: we’re seeing a lot of arguments being presented about the potential of generative AI - much like we did for NFTs - but right now, there’s very little evidence that it’s bringing something customers actually want. Meanwhile, developers are playing with the technology and figuring out not just whether it’s viable, but also whether it’s even legal for them to use in the context they wish to apply it to.

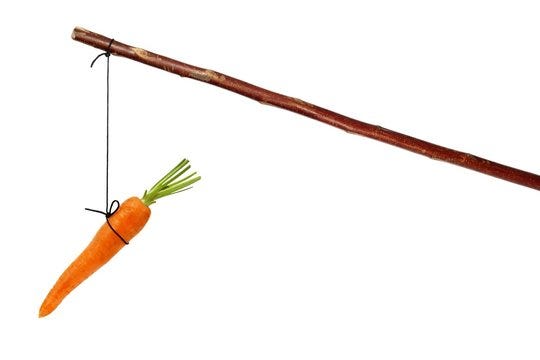

(AI-Generated) Carrots, No Sticks

To me, this is all where the ‘AI Autumn’ begins to emerge, as a ‘cooling off’ period may soon manifest as consumers simply get fed up of what’s being shovelled at them. Gaming consumers don’t just talk with their wallets; they are increasingly savvy to what’s being pushed upon them (even when their perspectives and politics are horribly misaligned, or flat out wrong). At the end of the day, we need to start seeing something emerge with generative AI for games that warrants all the hype - and frankly, that isn’t going to happen. It’s all carrot and no stick. I mean, there are plenty of games out there doing just fine without generative AI, but as everyone continues to advocate for the technology, customers are beginning to see that the carrot was generated with DALL-E, and it doesn’t look all that appetising.

Of course, any sea change is slow and gradual; it’s not going to happen overnight. Meanwhile, I do think the AI industry as a whole needs to do a better job of growing up. There are a lot of really bad decisions being made in the service of a naïve vision of a brighter AI-driven future. It’s not going to get there if you don’t give end-users an incentive to invest.

That’s Enough Outta Me (and Enough Outta ‘Him’)

Ooft, I have been rather grumpy in this week’s newsletter. But I felt what I stated was warranted, so I’m happy to get it off my chest. I can be a bit more upbeat next week for sure!

Thanks for checking in on this week’s newsletter. We have some fun stuff in the coming weeks: a report on the FDG conference, a follow-up to the FEAR retrospective, and one of the biggest case study analyses I’ve ever conducted! Take care, folks.