Crowdsourcing Intelligence in 'The Restaurant Game'

Winding the clock back to the early 2010s and the first example of crowdsourced game intelligence.

AI and Games is made possible thanks to our paying supporters on Substack as well as crowdfunding over on Patreon. Support the show to have your name in video credits, contribute to future episode topics, watch content in early access, and receive exclusive supporters-only content on Substack.

If you'd like to work with us on your own games projects, please check out our consulting services provided at AI and Games. To sponsor our content, please visit our dedicated sponsorship page.

Earlier this year we released the F.E.A.R. Retrospective: a deep dive into the creation of one of the most celebrated first-person shooters of all time. What helped put F.E.A.R. on the map in the eyes of many players, as well as game developers, was the underlying AI technology that powered the enemy combatants throughout the experience.

This AI planning technique took inspiration from a corner of AI research that, until that time, had had very little impact on the video games industry. And in our original video we learned how it all came together courtesy of none other than Jeff Orkin: the AI programmer on F.E.A.R.

But while F.E.A.R. continues to be held in high regard even today, it was Orkin's last contribution to the franchise, after which he disappeared from game development. Having been credited on several titles at Monolith, and having been heavily involved in the AI of both No One Lives Forever 2 and later F.E.A.R., Jeff left the games industry in the summer of 2005, only to return more recently with his new startup, BitPart, which is taking a new approach to building interesting and dynamic non-player characters.

But critically, I wanted to figure out what happened in between. Jeff left Monolith mere weeks before F.E.A.R.'s release to pursue a career in grad school. For this episode of AI and Games we're going to answer a question that has sat in the back of my mind for close to 20 years: what happened to Jeff Orkin?

Heading to Grad School

Now look, let's not bury the lede: Jeff's fine. In fact I can attest to this, having met Jeff in person for the first time at the Game Developers Conference (GDC) earlier this year, and this was after I had run several hours of interviews with him for our F.E.A.R. retrospective. So I can say quite confidently that he's doing well, he's having fun, and he's working hard on all the cool stuff happening over at BitPart - which we'll get to in a minute.

But when I reached out to Jeff about interviewing him for the retrospective, I explained that I wanted to know more than just his experiences working on the game. I wanted to find out where his career had taken him, and try to set the record straight on a man whose contributions to the field had largely been mythologised across a lot of media surrounding AI for games, including my own. I had pieced together his career outside of the games industry, which is a polite way of saying I stalked his LinkedIn profile, but I wanted to hear the story of how it all connected. And in truth, ever since completing his work on F.E.A.R., he's been on a path of discovery that has led him back into the industry and to the formation of BitPart.

So of course the big question on my mind was: why leave? The vibe within the studio suggested that Monolith knew they were onto a hit, and the press and sales not long after release only reinforced this. So why give it all up to go back to college and enter grad school?

Jeff Orkin: It was a very kind of backwards decision to go back to grad school after, I mean, I was kind of at my career high - but I think it was a combination of things. So for one thing it was just this eye-opening experience working on planning in F.E.A.R. and starting to dig into academic literature, and realizing that there are people exploring this problem more deeply. I felt like I was only scratching the surface and didn't like, you know, I learned AI on the job but I felt like I didn't really know AI. Of course it took getting into grad school to realize at that time no one really knew AI, like people were exploring a lot of things but there was a lot not figured out. I mean that's still the case to some degree.

So part of it was the curiosity, part of it was also in the kind of research I was doing on the job. I was coming across a lot of papers that were inspiring me coming out of the MIT Media Lab from Bruce Blumberg's group. That's the group where Damian Isla was doing his Master's work.

[Editorial Note: Damian Isla is a game developer who is the co-founder and design director of ‘The Molasses Flood’ games studio. He is largely known in game/AI circles for being the AI lead on Bungie’s early entries in the Halo franchise].

[The AI planning tech in F.E.A.R.] GOAP is a simplified version of STRIPS planning, but then there's the whole agent architecture that supports that. That was inspired by the C4 architecture developed in Bruce Blumberg's research group. So reading that stuff just made me really want to, you know, go and join that group and be part of the evolution of what they were doing.
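To make the STRIPS connection a little more concrete, here is a minimal sketch of STRIPS-style planning in Python: each action lists the facts it requires (preconditions), the facts it makes true and the facts it makes false, and a search finds a sequence of actions that reaches a goal. The action names are hypothetical, and the plain breadth-first search is a deliberate simplification; this is not F.E.A.R.'s code, and Orkin's published descriptions of GOAP use A* search over actions with costs.

```python
from collections import deque
from dataclasses import dataclass

@dataclass(frozen=True)
class Action:
    name: str
    preconditions: frozenset  # facts that must hold before the action runs
    add_effects: frozenset    # facts the action makes true
    del_effects: frozenset    # facts the action makes false

def plan(start, goal, actions):
    """Breadth-first forward search for the shortest action sequence reaching the goal facts."""
    start, goal = frozenset(start), frozenset(goal)
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:  # every goal fact holds in this state
            return steps
        for action in actions:
            if action.preconditions <= state:
                nxt = (state - action.del_effects) | action.add_effects
                if nxt not in visited:
                    visited.add(nxt)
                    frontier.append((nxt, steps + [action.name]))
    return None  # goal unreachable with these actions

# Hypothetical soldier actions, for illustration only.
ACTIONS = [
    Action("draw_weapon", frozenset(), frozenset({"armed"}), frozenset()),
    Action("reload", frozenset({"armed"}), frozenset({"loaded"}), frozenset()),
    Action("goto_cover", frozenset(), frozenset({"in_cover"}), frozenset()),
    Action("attack", frozenset({"armed", "loaded"}), frozenset({"target_dead"}), frozenset({"loaded"})),
]

print(plan(set(), {"target_dead"}, ACTIONS))  # -> ['draw_weapon', 'reload', 'attack']
```

The appeal for games is that designers author small, independent actions and the planner assembles the sequence at runtime, rather than every transition being wired by hand.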

I'd been working on F.E.A.R. for a while and had been presenting at conferences about what we were doing, and I reached out to Bruce and said “hey I'm interested in applying to your group”. He wrote back, and it was this bittersweet email. He was like “oh yeah I've seen your presentation and love what you're doing. I'd love to have you in my group, but I'm leaving…”.

While Blumberg was moving back into industry, he introduced Jeff to a number of his colleagues who might be interested in having him come on board. This led to him meeting Deb Roy. Roy was at the time an associate professor at MIT who had previously defended his PhD thesis at the institution in 1999. Roy's research in the early 2000s had been heavily focussed on natural language, from speech recognition to, critically, building interfaces that would allow systems to derive meaning and understanding from human language.

And it was his interaction with Roy that led to what may at first seem like a deviation from Jeff's previous work, but which, as we'll see later, proved to have a huge influence on his eventual return to game development.

Orkin: Deb had just had his first child, and his research vision was pivoting to trying to understand how children learn language. Trying to understand how that could inform how we could get machines to converse.

You know like any new parent, he installed 11 cameras and 14 microphones in his ceiling and recorded everything his son did for three years. And so he had a complete record of everything that happened in his home with the three and later four people who lived there. And from that the research group was doing really interesting research in tracking language development.

Orkin: I went to grad school in 2005, and this was kind of too early for the cloud, so dealing with that massive amount of audio and video required the grad students to cart physical hard drives back and forth to Deb's house. His house almost caught on fire at one point due to all this equipment in his attic.

But anyway so the research group was trying to understand like if you had a lot of data about how a small group of people converse every day, could you learn how language develops and what words mean?

But I was coming in with this game development experience and Deb just kind of let me have free rein on a parallel track that was exploring kind of the same problem, but flipping the problem around. So I ended up creating video games that would record human players conversing and interacting in first-person 3D, and I would log every action they took and every word they said to each other, either by typing text or via speech-to-text.

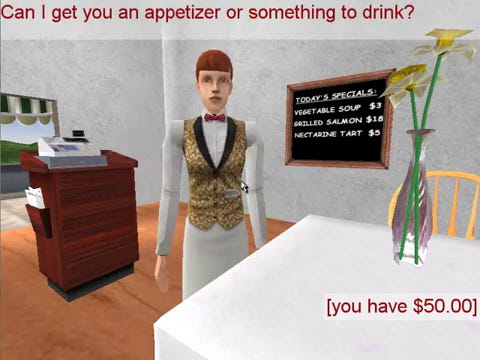

But in order to build a game around conversations, and critically have it be something one person could build a huge chunk of, the experience needed some sort of constraints put upon it. The resulting project was 'The Restaurant Game': a small playable experience built inside the Torque game engine that allowed players to assume the roles of customers or waiters, and in doing so provided a framework around which players could initiate conversations.

The Restaurant Game

Orkin: There were about 40 interactive objects, like vases on the table and plates of food, chairs, a microwave, and a cash register. Every object had the same five or six choices of interaction: you could pick it up, sit on it, take a bite of it, and hand it to someone. We just wanted to see what do players do in this environment, and how can we learn how to understand language by the context in which that language is used.

The Restaurant Game takes the ideas that Roy had been exploring, but explodes them in scale. Rather than looking at a handful of people and how they handled speech, Orkin's project saw thousands of individual players participate over a period of time. It also made data far easier to accumulate, given that a lot less had to be transcribed manually. You could access the history of actions a player had taken within the game prior to an interaction, where the players stood relative to one another, or the messages they typed to each other within the space.

But in 2007, The Restaurant Game didn't really look or feel like a traditional game, and so one of Orkin's biggest concerns in the beginning was whether getting people to roleplay in a small online game would actually yield results.

Orkin: We weren't confident that anyone would play the game, because there wasn't really a game. It was just like ‘all right you're a customer, you're a waitress, come have dinner’, and you were anonymously paired online.

I was running all these game servers - again this was pre-cloud - so I had a closet in the Media Lab with like seven old PCs that had been abandoned, and each one was running multiple game servers hosting I don't know how many people at once.

But it was also pre-social media, so I didn't really have a way to get the news out that there was this game that I wanted people to play. I was just like emailing game developers I knew, researchers, trying to get featured on places like Hacker News or a news aggregation site like Digg.

And all of that grinding paid off, with over 16,000 people playing the game in the end. But what is the end result of getting all of this data? Would it even make sense? Even with the trappings of a game environment, it's easy for players to go off-piste. There was nothing constraining players from deviating from the transaction of a customer and waiter in the restaurant: they were simply told their roles, and then left to get on with it. So what did the end result look like?

Orkin: A lot of people went through the motions of what happens working in a restaurant. ‘Hello sir, welcome to our restaurant. Have a seat right here.’ Then they would take an order and serve food. There was a menu, and you could get the chef to serve specific items through keywords, and there was a bartender. People would order their food, they would eat it, and pay their bill.

But the other half of the players did anything you could think of. They would, you know, flirt with the waitress. People would pick up the cash register and run out the door; robbing the restaurant. There was a guy who sent me a screenshot of how he had stacked like 17 cherry cheesecakes and climbed on the roof of the restaurant, and was celebrating with champagne. People would joke like ‘uh can you tell me where the bathroom is?’ because I hadn't built a bathroom into my little virtual restaurant. So there was a lot of fun ad-libbing of like ‘oh sorry it's broken you got to go outside, and pee in the back’ or whatever.

So the real trick next was to reverse engineer some meaningful results from all of these interactions. What could be done with all of that data? Well, by letting thousands of players participate in the game, Orkin had effectively crowdsourced data on the range of possible interactions and narratives that could occur within the restaurant. Sure, it's not 100% complete, but it provided sufficient scaffolding for what would ultimately become the major contribution of his thesis: an AI system that acted as a narrative director, helping craft the story by recognising what the player was doing, and having the AI control the other character to support that narrative.

Orkin: So by the time I really had a good data set, it was 2009. Initially I was experimenting with language models, but at that time they were just ‘LMs’ not ‘LLMs’: there were no large language models. If you wanted a language model you would just train it yourself. There weren't like big pre-trained models.

So I was taking the data from like 10,000 game plays of The Restaurant Game, and training a language model that included actions as well, and just creating characters that could randomly interact with each other. It was very entertaining, but they would get caught in cycles, and there were a lot of incoherent interactions. It was kind of a neat toy problem, but not workable for an actual game.
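Orkin doesn't detail the model he trained, but the cycling problem he describes is easy to reproduce with about the simplest possible 'LM': a bigram model over one stream of tokens in which actions and lines of dialogue are interleaved. In this sketch the `say:`/`do:` token format and the two tiny transcripts are invented for illustration. Because each step only conditions on the previous token, a random walk can loop through `say:what_would_you_like`, `say:water_please` and `do:serve_water` without ever getting to the meal.

```python
import random
from collections import defaultdict

def train_bigram(transcripts):
    """Count which token follows which across all logged games."""
    counts = defaultdict(lambda: defaultdict(int))
    for game in transcripts:
        tokens = ["<start>"] + game + ["<end>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def generate(counts, rng, max_len=20):
    """Random walk through the bigram table until <end> or max_len tokens."""
    out, current = [], "<start>"
    while len(out) < max_len:
        followers = counts[current]
        if not followers:
            break
        tokens = list(followers)
        weights = [followers[t] for t in tokens]
        current = rng.choices(tokens, weights=weights)[0]
        if current == "<end>":
            break
        out.append(current)
    return out

# Hypothetical transcripts: actions ("do:") and speech ("say:") share one stream.
TRANSCRIPTS = [
    ["say:hello", "do:sit", "say:what_would_you_like", "say:steak_please",
     "do:serve_steak", "do:eat", "do:pay"],
    ["say:hello", "do:sit", "say:what_would_you_like", "say:water_please",
     "do:serve_water", "say:what_would_you_like", "say:steak_please",
     "do:serve_steak", "do:eat", "do:pay"],
]

model = train_bigram(TRANSCRIPTS)
print(generate(model, random.Random(0)))
```

Every local step in the output is plausible, yet nothing forces the interaction as a whole to make progress, which is one way to see why Orkin went looking for more structure than raw sequence statistics could provide.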

So I started to realize I needed more semantics in the data I'd collected, and it wasn't going to be something that machine learning could just magically produce. I needed to somehow get some human input to explain that data, and that kind of inspired the pivot in my research in 2010. I started developing tools that would let me hire anyone on the internet who was fluent in English to review these transcripts of gameplay in the restaurant, and explain what I called ‘events’.

So you were looking at like a timeline view of everything that happened in the game and you would place these spans of color representing events, and give them a label. Like someone's ordering food, or somebody's serving food, or someone's arguing about getting the wrong order. We would overlay these annotations onto thousands of transcripts and then there was another step of clustering dialogue.
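The annotation step can be pictured as labelled spans laid over a transcript. The sketch below is a hypothetical data model, not Orkin's actual tooling: `EventSpan`, its inclusive `start`/`end` line indices, the sample transcript, and the choice to let spans overlap are all assumptions. It shows two queries such a tool would plausibly need: which events cover a given line, and which lines no annotator has explained yet.

```python
from dataclasses import dataclass

@dataclass
class EventSpan:
    label: str  # annotator's description, e.g. "ordering food"
    start: int  # first transcript line covered (inclusive)
    end: int    # last transcript line covered (inclusive)

def events_at(spans, line):
    """Labels of every event span that covers the given transcript line."""
    return [s.label for s in spans if s.start <= line <= s.end]

def unannotated(spans, num_lines):
    """Transcript lines that no event span covers yet."""
    covered = set()
    for s in spans:
        covered.update(range(s.start, s.end + 1))
    return [i for i in range(num_lines) if i not in covered]

# Hypothetical transcript of one play session.
TRANSCRIPT = [
    "CUSTOMER says: table for one?",
    "WAITRESS says: right this way",
    "CUSTOMER sits on chair",
    "CUSTOMER says: I'll have the steak",
    "WAITRESS picks up salmon",
    "WAITRESS gives salmon to CUSTOMER",
    "CUSTOMER says: I ordered steak!",
    "CUSTOMER sits on table",
]

SPANS = [
    EventSpan("getting seated", 0, 2),
    EventSpan("ordering food", 3, 3),
    EventSpan("serving food", 4, 5),
    EventSpan("arguing about wrong order", 5, 6),
]

print(events_at(SPANS, 5))                  # -> ['serving food', 'arguing about wrong order']
print(unannotated(SPANS, len(TRANSCRIPT)))  # -> [7]
```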

In the data I collected I found that there could be hundreds of ways to say the same thing. You know asking for the check, we found like 136 different ways people word that. So we ended up with, I can't remember, maybe 85 different clusters of dialogue that we referred to as ‘speech acts’. Between the speech acts and the annotation of the events, we had enough information where now we could run a planning system - a little bit different from GOAP, maybe more similar to an HTN, but not quite - but it was a system that would pick an event and start automating characters. But it would also watch for what the player was doing, and try to match content we've seen in the past with some abstraction of the history of what's happened up to that moment.
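In The Restaurant Game the dialogue clusters were built with human help, so this sketch only illustrates the downstream idea: once lines are grouped into speech acts, a new utterance can be mapped to its closest cluster. The cluster names, the example lines, and the word-overlap (Jaccard) similarity with a 0.2 threshold are all assumptions chosen for illustration, not the matching method used in Orkin's system.

```python
import re

# Hypothetical speech-act clusters, each holding a few example lines.
SPEECH_ACTS = {
    "REQUEST_BILL": ["check please", "can i get the bill",
                     "could i have the check", "bring me the bill please"],
    "GREET": ["hello", "hi there", "good evening welcome"],
    "ORDER_FOOD": ["i will have the steak", "can i get the salmon",
                   "i would like the lobster"],
}

def tokens(text):
    """Lower-cased word set, ignoring punctuation."""
    return set(re.findall(r"[a-z']+", text.lower()))

def jaccard(a, b):
    """Word overlap between two sets, from 0.0 (disjoint) to 1.0 (identical)."""
    return len(a & b) / len(a | b) if a | b else 0.0

def classify(utterance, acts=SPEECH_ACTS, threshold=0.2):
    """Speech act whose closest example best overlaps the utterance, or None."""
    words = tokens(utterance)
    best_act, best_score = None, 0.0
    for act, examples in acts.items():
        for example in examples:
            score = jaccard(words, tokens(example))
            if score > best_score:
                best_act, best_score = act, score
    return best_act if best_score >= threshold else None

print(classify("Could I get the check, please?"))  # -> REQUEST_BILL
print(classify("where is the bathroom"))           # -> None
```

Collapsing many surface wordings into one label is what lets a planner reason about "the customer asked for the bill" rather than about 136 separate strings.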

By the time I finished the PhD - I defended in December of 2012 and then got the thesis signed off in 2013 - I could automate a waitress who would converse with you, and walk around, and serve you food. It would also react appropriately if you did something weird like sitting on top of the table or stealing the cash register or whatever. And that kind of planted the seeds for what I've been working on in the decade since.

From PhD to BitPart

So with the PhD complete, what next? Could this be a viable tool to start using in game development? I think, despite how interesting the technology was, it would have been a tough sell around ten years ago. And this led to Jeff's decade outside of games, as he recognised the value and potential of the technology he had developed in other business areas.

Orkin: My goal with the PhD was never to become a professor. It was to explore new AI technologies, and then I hoped to do something entrepreneurial after. So the way I pursued that was I got grants from the government, from the National Science Foundation, to commercialize my PhD research.

Initially the grant we got was to turn The Restaurant Game technology into an educational game that would let kids in middle school practice social skills; learn empathy through interacting socially with AI characters. We started down that road rewriting the AI system from The Restaurant Game to be able to run on the cloud, and integrate with Unity [game engine], and we made a bit of progress but it wasn't a ton of funding, and so progress was slow.

Then what happened was the Amazon Alexa came out, and all of a sudden everybody cared about conversational AI. Like in 2013 when I started Giant Otter, nobody cared about conversational AI. It wasn't clear that there was any business opportunity there. So we were like ‘where could you apply this?’. Educational games? Maybe practicing communication skills? And the thought was that could be the thing that would fund the core technology, and then eventually we'd bring it back to mainstream games.

I was very naïve. I'd never started a company before, and didn't really know what I was doing. So all of a sudden chatbots were becoming a big business - the earlier hype cycle of chatbots around like 2015 - and so given how slow we were making progress on turning our solution into something for games, and the fact that it wasn’t very clear how this was going to work as a business before we ran out of money, we just decided to pivot and go all in on the conversational engine that was unique to us. That could learn conversation from data, and power a conversation via voice or text. We just doubled down on that, and discarded the work we were doing in Unity.

That sent us in the direction of becoming a chatbot company for businesses. That got us acquired by Drift, which is a marketing tech company, and we became Drift's AI team and built the product that powered automated chat on big websites like Adobe, Peloton, Bloomberg, and companies like that.

So you know, my thinking at Giant Otter at that time was like ‘oh well we've got to go where there's an opportunity’, and this will let us bring in revenue, maybe become profitable, and that will give us the opportunity to go back and experiment with these game things that are more risky. Business-wise that makes no sense, like you can't go in the direction of becoming an enterprise chatbot and then be like ‘oh and we're going to have a new product for games’, it's unlikely that's ever going to work. And sure enough once we went that direction, there was no coming back.

Now hey, no judgement here! I mean, I was literally a guy in finance for a short period in what now feels like a previous life. Cutting your teeth building a business, and learning to deploy AI at scale in the cloud as was needed at Drift, are all useful skills that would later have an impact on Orkin's future work.

And so now, in 2024, BitPart is a start-up that revisits many of the ideas from across Orkin's work, but at a time when perhaps the technology has now caught up with him. One of the underlying motivations behind The Restaurant Game was that in F.E.A.R. there are many AI systems working together to achieve one overall net effect. As in many games even today, a navigation mesh dictates how and where a character can move across the space, a decision-making layer, in this case Goal Oriented Action Planning, selects the actions the soldiers will execute, and finally the bark and dialogue layer helps sell the effect, even if it is all smoke and mirrors and entirely fabricated. What if you could build a system that handles all of it at once? Spatial awareness and movement, action selection, and conversational dialogue, all as one system?

As Jeff explained to me in our interview, it was one of the first things he tried at MIT. But the range of actions, preconditions and effects quickly proved too large for a planner to encapsulate. Encoding this information manually, and then searching through it, would simply have been impossible. The Restaurant Game took this one step further, in that by crowdsourcing thousands of playthroughs, a knowledge base could be reverse-engineered rather than crafted manually. But as discussed already, this too was massively time-consuming: annotating all the playthroughs was an intense workload.

Orkin: You know, the whole thinking behind The Restaurant Game was, what could we get characters to do if we didn't need to by-hand come up with all of the behaviour and language, but instead just get a snapshot of ideas of what could happen in this restaurant environment. Well an LLM (large language model) is a snapshot of all the world's language. So after four years of Drift and playing with LLMs, I was starting to recognize that LLMs were an alternative to what I was doing with The Restaurant Game. That it was a new way to create many examples of the dialogue or behaviour that could happen in different narrative situations.

So after four years of Drift the AI team was in a good place, and I felt like it was probably a good time to move on and I started talking to my teammate Sarah Rudzki who is my co-founder of BitPart.

So interesting backstory there, Sarah is a theatre person. She studied theatre in undergrad at Dartmouth, and then became a theatre writer/director/producer at the Chicago Shakespeare Theatre Company. She started working with me on The Restaurant Game because I had to hire a lot of part-time, remote help through websites like Upwork to annotate data, or play the game repeatedly, or cluster lines of dialogue that seem to mean the same thing. Just out of luck I found Sarah, who was looking for side jobs while working in theatre - where you don't make much money - and she got involved in data annotation, and it was clear right away that she was kind of overqualified for what I was having her do. So then she started managing all the annotators I was hiring around the world, and started, you know, collaborating with me on what could make the system better. How can we create better characters? So when I started Giant Otter, I hired her in as our first non-engineer, as a project manager. Then she and I led the Drift AI team together, and then after we'd spent some time at Drift we started talking about what we really want to do that brings together my interest in games and AI and her interest in theatre.

There was just a pretty clear path to BitPart; leveraging what's happening now with LLMs as well as revisiting the same techniques I was using for The Restaurant Game, and bringing in AI planners - in particular HTN planners - and putting that all together. There are four of us that went from Giant Otter to Drift, to BitPart. With some VC funding we've now built the team up to ten people, and yeah it's really fun. We're experimenting with kind of the new way to create characters and games, especially focused on multi-agent interactions populating a space. Where a group of characters perform their roles together. What we're going for is a seamless mix of dialogue and action.
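Since both Orkin's thesis system and BitPart are described in terms of HTN planning, here is a minimal sketch of what hierarchical task network decomposition looks like: compound tasks are refined into subtasks by trying methods in order, until only primitive actions remain. The restaurant task names, the dictionary-based state and the `decompose` function are hypothetical and written for illustration only; this is not BitPart's planner.

```python
# Primitive tasks: name -> (precondition, effect), both functions of a state dict.
PRIMITIVES = {
    "greet": (lambda s: True, lambda s: {**s, "greeted": True}),
    "seat_customer": (lambda s: s["greeted"], lambda s: {**s, "seated": True}),
    "take_order": (lambda s: s["seated"], lambda s: {**s, "ordered": True}),
    "serve_food": (lambda s: s["ordered"], lambda s: {**s, "served": True}),
}

# Compound tasks: name -> methods as (applicability test, subtasks), tried in order.
METHODS = {
    "run_dinner": [
        (lambda s: not s["greeted"], ["greet", "seat_customer", "serve_meal"]),
        (lambda s: True, ["serve_meal"]),
    ],
    "serve_meal": [
        (lambda s: s["served"], []),  # meal already served: nothing left to do
        (lambda s: True, ["take_order", "serve_food"]),
    ],
}

def decompose(tasks, state):
    """Return the primitive tasks that accomplish `tasks` from `state`, or None."""
    if not tasks:
        return []
    head, rest = tasks[0], tasks[1:]
    if head in PRIMITIVES:
        precondition, effect = PRIMITIVES[head]
        if not precondition(state):
            return None
        tail = decompose(rest, effect(state))
        return None if tail is None else [head] + tail
    for applicable, subtasks in METHODS[head]:
        if applicable(state):
            result = decompose(subtasks + rest, state)
            if result is not None:
                return result
    return None  # no method worked: backtrack

START = {"greeted": False, "seated": False, "ordered": False, "served": False}
print(decompose(["run_dinner"], START))
# -> ['greet', 'seat_customer', 'take_order', 'serve_food']
```

Unlike the flat STRIPS-style search, the designer-authored methods constrain the search to sensible recipes, and the same top-level task adapts to state: a customer who is already seated skips straight to taking the order.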

There were some maybe unfortunate things that happened to us with BitPart. Like, in 2022 the economy was booming until it wasn't! I thought this is going to be easy: I'm going to leave Drift, there are plenty of investors interested because we've had a successful exit previously, and we're just going to like raise a whole bunch of money, and build this great thing. I left Drift, the economy collapsed, all of the interest in the metaverse stuff kind of melted - which also kind of hurt our spin on things - and then the other thing that happened was that ChatGPT was released to the world maybe a month after we started BitPart. Maybe in some ways it stole our thunder a little bit, but it really changed people's understanding of what AI is, or people's definition of what AI is.

There are a number of start-ups that then rushed to market to say basically ‘ChatGPT is a character, and if you just stick that in the body of an avatar: voila! You have next-generation NPCs.’ I don't agree with that perspective, and at BitPart we're doing something quite different.

BitPart's AI tool was showcased behind closed doors at GDC earlier this year, in which all of the NPCs in a virtual saloon are derived from a large corpus of knowledge fed into a large language model. Character bios, scripts and dialogue from cowboy literature and much more besides. But in order for it to work in the game, it's reduced down into a planning model that can run seamlessly in the engine.

Naturally this is far from the final product: it's still in development, and no doubt things have evolved quite a bit since I got to see this demo at GDC, but fingers crossed we will have a follow-up on how this technology is evolving in a future episode.

Closing

Time is a funny thing: we might have an idea that we feel could change everything, but it could easily seem impossible at first pass, or indeed on the second or third attempt as well. It's a journey that anyone who has tried to build a business, build a community, or go through the perils of grad school will no doubt have encountered. But as you continue to gather knowledge, experience, and dare I say it a little bit of wisdom, sometimes you find yourself right back at that initial idea.

While I most certainly enjoyed the hours spent listening to Jeff's stories that we've recounted both in this video and in the F.E.A.R. Retrospective, the one thing that I've really taken away from this is how the passage of time can often afford us perspective. I recall studying F.E.A.R. in grad school, and seeking to find my own way to address some of these issues. It went in a different direction, and one that I'm rather confident in saying was a dead end. But it was exciting to hear how we share similar perspectives on many aspects of AI for game development. So I can't help but admire the persistence that Jeff has in returning to this idea once again, and taking everything he has learned over the past 20 years, all in an effort to give it another shot.

Related Resources

You can find out more about The Restaurant Game from the publications hosted at the MIT Media Lab: https://www.media.mit.edu/people/jorkin/publications/

Plus, check out the work Jeff is working on now over at: https://www.bitpart.ai/